Dennis Wood

Senior Member

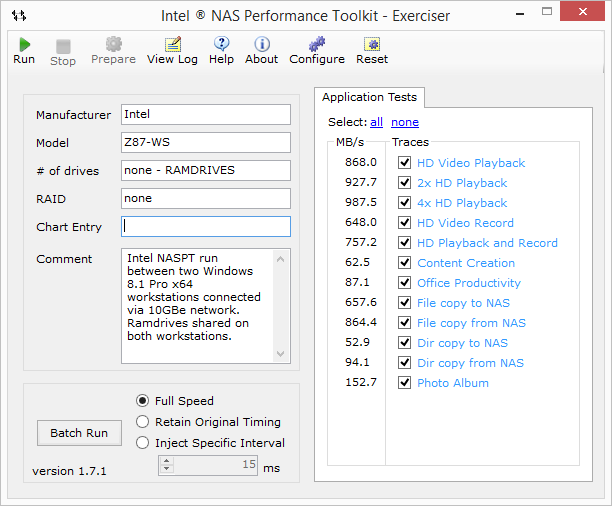

Thanks, T. The last part of the series covers an affordable 10GbE workstation and a $2,500, 1000 MB/s, 20 TB server build, my favorite part of the series. Leaving the best for last!

Cheers,

Dennis.


3.1. Single RSS-capable NIC

This typical configuration involves an SMB client and an SMB server, each configured with a single 10GbE NIC. Without SMB Multichannel, if only one SMB session is established, SMB uses a single TCP/IP connection, which naturally gets affinitized to a single CPU core. If many small I/Os are performed, that core can become a performance bottleneck.

Most NICs today offer a capability called Receive Side Scaling (RSS), which automatically spreads multiple connections across multiple CPU cores. However, RSS assigns each connection to one core, so when only a single connection is in use, RSS cannot help.

With SMB Multichannel, if the NIC is RSS-capable, SMB will create multiple TCP/IP connections for that single session, avoiding a potential bottleneck on a single CPU core when many small I/Os are required.
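
To see why one connection sticks to one core, it helps to model how RSS picks a core. RSS hashes each packet's connection 4-tuple (source/destination address and port), commonly with a Toeplitz hash, so every packet of the same connection maps to the same core. The sketch below is a simplified model under stated assumptions: the 40-byte key is a sample value, the addresses and ports are made up, `rss_cpu` is a hypothetical helper, and it takes the hash modulo the core count, whereas real NICs use the low bits of the hash to index an indirection table.

```python
import ipaddress

# Sample 40-byte RSS secret key (the one commonly used in RSS verification
# examples). Assumption: any 40-byte key works for this illustration.
RSS_KEY = bytes.fromhex(
    "6d5a56da255b0ec24167253d43a38fb0"
    "d0ca2bcbae7b30b477cb2da38030f20c"
    "6a42b73bbeac01fa"
)


def toeplitz_hash(key: bytes, data: bytes) -> int:
    """32-bit Toeplitz hash: XOR a sliding 32-bit key window for every set input bit."""
    key_int = int.from_bytes(key, "big")
    key_bits = len(key) * 8
    assert key_bits >= len(data) * 8 + 32, "key too short for input"
    result = 0
    for bit in range(len(data) * 8):
        if data[bit // 8] & (0x80 >> (bit % 8)):
            # The 32 key bits starting at position `bit`, counted from the MSB.
            result ^= (key_int >> (key_bits - 32 - bit)) & 0xFFFFFFFF
    return result


def rss_cpu(src_ip: str, dst_ip: str, src_port: int, dst_port: int, num_cpus: int) -> int:
    """Hypothetical helper: map a TCP/IPv4 connection to a core (simplified: hash % cores)."""
    data = (
        ipaddress.IPv4Address(src_ip).packed
        + ipaddress.IPv4Address(dst_ip).packed
        + src_port.to_bytes(2, "big")
        + dst_port.to_bytes(2, "big")
    )
    return toeplitz_hash(RSS_KEY, data) % num_cpus


if __name__ == "__main__":
    # One SMB connection (client port 50000 -> server port 445): always the same core.
    print(rss_cpu("192.168.1.10", "192.168.1.20", 50000, 445, num_cpus=8))
    # Several connections from different client ports can land on different cores.
    print([rss_cpu("192.168.1.10", "192.168.1.20", p, 445, num_cpus=8)
           for p in range(50000, 50004)])
```

Because the hash depends only on the 4-tuple, a single connection can never be spread out; opening more connections, as Multichannel does, is what gives RSS something to distribute.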

3.2. Multiple NICs

When using multiple NICs without SMB Multichannel, if only one SMB session is established, SMB creates a single TCP/IP connection using only one of the available NICs. In this case, not only is it impossible to aggregate the bandwidth of the multiple NICs (for instance, achieving 2 Gbps with two 1GbE NICs), but the session can also fail if that specific NIC is disconnected or disabled.

With Multichannel, SMB will create multiple TCP/IP connections for that single session (at least one per interface, or more if the interfaces are RSS-capable). This lets SMB use the combined bandwidth of the NICs and allows the SMB client to keep working uninterrupted if a NIC fails.
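
The bandwidth and failover behaviour for the two 1GbE NIC case can be captured in a few lines. This is an idealized sketch, not a model of the real SMB client: it ignores protocol overhead, assumes that without Multichannel the session rides on the first NIC in the list, and treats that NIC failing as an interrupted session (0 Gbps). `Nic` and `session_bandwidth_gbps` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Nic:
    name: str
    gbps: float
    up: bool = True


def session_bandwidth_gbps(nics: list[Nic], multichannel: bool) -> float:
    """Idealized bandwidth available to ONE SMB session (no protocol overhead)."""
    if multichannel:
        # At least one connection per interface, so every live NIC contributes.
        return sum(n.gbps for n in nics if n.up)
    # Without Multichannel the session uses one connection on one NIC.
    # Assumption: that is the first NIC; if it goes down, the session is
    # interrupted, modelled here as 0 Gbps.
    chosen = nics[0]
    return chosen.gbps if chosen.up else 0.0


if __name__ == "__main__":
    nics = [Nic("nic1", 1.0), Nic("nic2", 1.0)]
    print(session_bandwidth_gbps(nics, multichannel=False))  # 1.0
    print(session_bandwidth_gbps(nics, multichannel=True))   # 2.0
    nics[0].up = False  # unplug the first NIC
    print(session_bandwidth_gbps(nics, multichannel=False))  # 0.0
    print(session_bandwidth_gbps(nics, multichannel=True))   # 1.0
```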

6. Number of SMB Connections per Interface

SMB Multichannel will use a different number of connections depending on the type of interface:

- For RSS-capable interfaces, 4 TCP/IP connections per interface are used.
- For RDMA-capable interfaces, 2 RDMA connections per interface are used.
- For all other interfaces, 1 TCP/IP connection per interface is used.

There is also a limit of 8 connections in total per client/server pair, which caps the number of connections per interface.

For instance, if you have 3 RSS-capable interfaces, they would ask for 3 × 4 = 12 connections, which exceeds the limit of 8, so you will end up with 3 connections on the first interface, 3 on the second, and 2 on the third.
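
The per-interface counts and the overall cap can be sketched as a small allocation routine. Assumption: connections are handed out round-robin, one per interface per pass, until each interface has what its type asks for or the total cap is reached. This ordering is chosen only because it reproduces the 3/3/2 example; it is not taken from the Windows SMB client. `allocate_connections` and its parameters are hypothetical names.

```python
# Connections each interface type asks for, per the list above.
DEFAULT_PER_TYPE = {"rss": 4, "rdma": 2, "other": 1}


def allocate_connections(interfaces, max_total=8, per_rss=4):
    """Round-robin one connection per interface per pass until the cap is hit."""
    wants = {**DEFAULT_PER_TYPE, "rss": per_rss}
    wanted = [wants[kind] for kind in interfaces]
    given = [0] * len(interfaces)
    total = 0
    progress = True
    while total < max_total and progress:
        progress = False
        for i, want in enumerate(wanted):
            if total == max_total:
                break  # overall client/server cap reached
            if given[i] < want:
                given[i] += 1
                total += 1
                progress = True
    return given


if __name__ == "__main__":
    print(allocate_connections(["rss", "rss", "rss"]))                # [3, 3, 2]
    print(allocate_connections(["rss", "rss", "rss"], max_total=16))  # [4, 4, 4]
    print(allocate_connections(["rss", "rdma", "other"]))             # [4, 2, 1]
```

In this sketch, `max_total` and `per_rss` loosely stand in for the MaximumConnectionCountPerServer and ConnectionCountPerRssNetworkInterface settings covered in 6.1 and 6.2; raising the cap to 16, for example, lets all three RSS interfaces reach their full 4 connections.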

We recommend that you keep the default settings for SMB Multichannel. However, those parameters can be adjusted.

6.1. Total Connections per Client/Server Pair

You can configure the maximum total number of connections per client/server pair using the PowerShell cmdlet:

```powershell
Set-SmbClientConfiguration -MaximumConnectionCountPerServer <n>
```

6.2. Connections per RSS-capable NIC

You can configure the number of SMB Multichannel connections per RSS-capable network interface using the PowerShell cmdlet:

```powershell
Set-SmbClientConfiguration -ConnectionCountPerRssNetworkInterface <n>
```

Right now, Samba 4.0 negotiates up to SMB 3.0 by default and supports its mandatory features, including the new authentication/encryption process. Over time, the Samba team will add more features, with SMB Direct (SMB over RDMA transports such as InfiniBand) already in testing.

The foundation for directory leases is in place, but advanced and specialized features such as Multichannel, transparent failover, and VSS support may never arrive. Because Samba has long supported its own type of clustered file server, it does not currently support Windows Scale-Out File Server.

I've sent in part 6...

In vain have I searched for part 5...

I elected not to publish one of the installments on SmallNetBuilder, so Dennis' part numbers are off for the SNB articles. "Part 5" refers to the SMB3 installment (our Part 4).
