Tech Junky


So, I found something interesting about higher-capacity NVMe/PCIe drives that has me scratching my head: why are technophiles giving their money to companies for M.2-based options when, in some cases, they can get double the capacity for the same price with U.2/U.3 drives?

It doesn't seem like a difficult proposition, depending on how you want to do things. There are PCIe adapter cards, and there are cages that fit the 5.25" bays in a case.

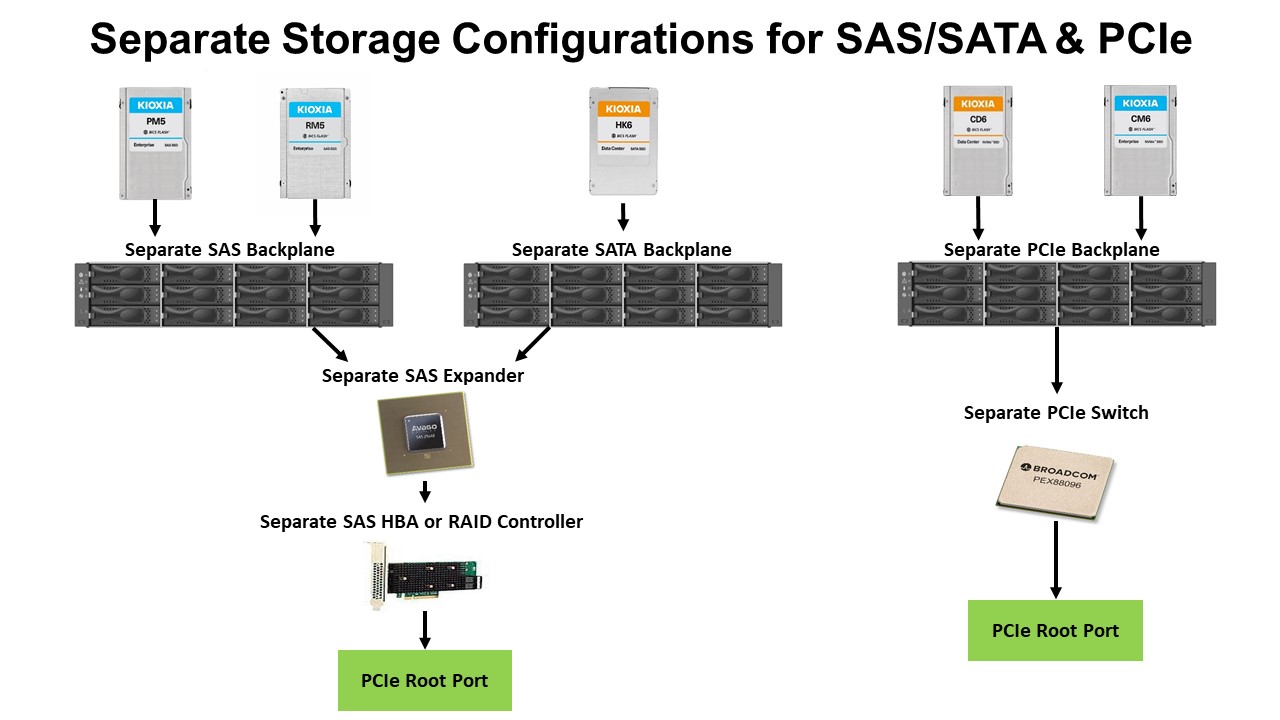

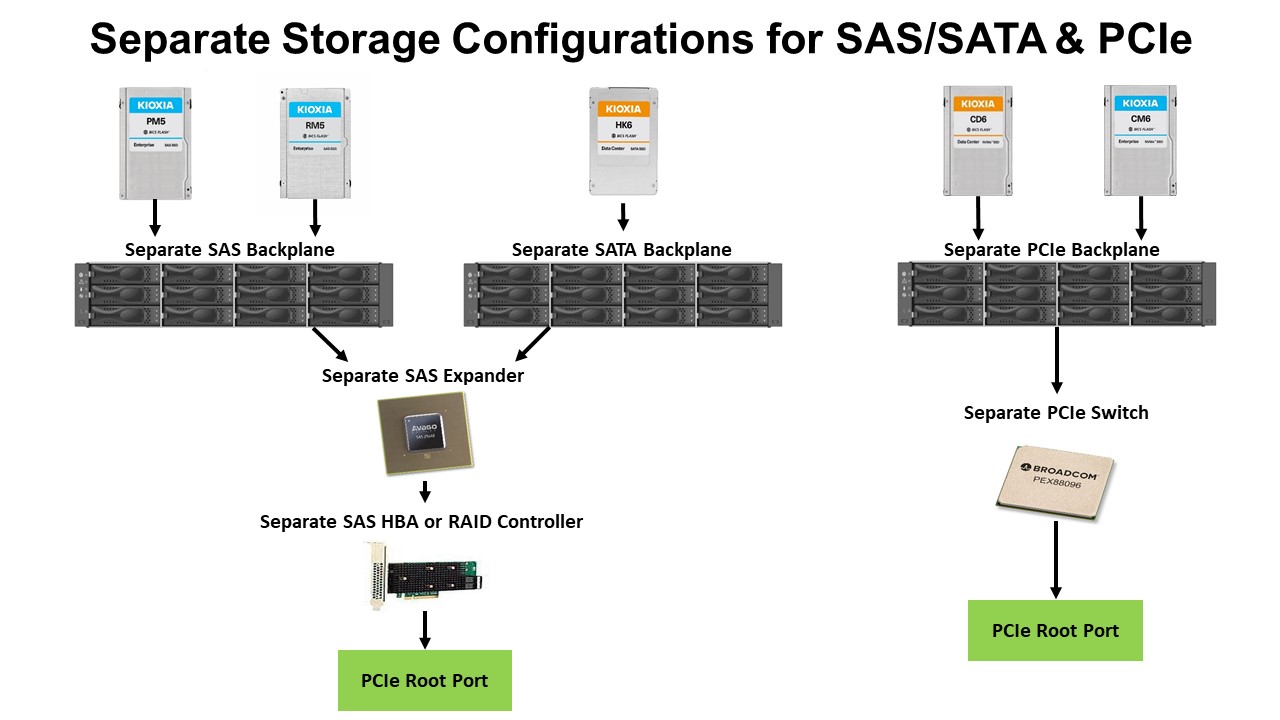

There are some issues, though, that have me wondering about compatibility between the two, since they both use the same SFF-8639 connector. In theory, the backplane/controller should dictate which drives it works with.

Some cards/cages say they work with both versions, while others say they don't.

This is similar in spirit to PCIe generation backward compatibility, where a Gen4 drive put into a Gen3 slot just runs at reduced bandwidth, except that here it only goes one way: a U.2 drive won't work in a U.3 bay even though the connector is the same. So if someone wants to take advantage of this storage option, it would be best to get a matching U.3 card/cage and drive. However, the price difference can be significant depending on the capacity.
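A minimal Python sketch of the compatibility rules described above, assuming a plain U.3-only bay with no dual-mode support (some "U.2/3" cards and cages advertise both, so the vendor's spec sheet wins). `Interface`, `drive_fits_bay`, and `link_generation` are hypothetical names for illustration only.

```python
from enum import Enum


class Interface(Enum):
    U2 = "U.2"
    U3 = "U.3"


def drive_fits_bay(drive: Interface, bay: Interface) -> bool:
    """Simplified rule: a matching drive/bay always works; a U.3 drive also
    works in a U.2 bay, but a U.2 drive does not work in a U.3-only bay,
    even though both use the same SFF-8639 connector. Assumes no dual-mode bays."""
    if drive is bay:
        return True
    return drive is Interface.U3 and bay is Interface.U2


def link_generation(drive_gen: int, slot_gen: int) -> int:
    """A PCIe link trains to the highest generation both ends support,
    so a Gen4 drive in a Gen3 slot runs at Gen3."""
    return min(drive_gen, slot_gen)


if __name__ == "__main__":
    for drive in Interface:
        for bay in Interface:
            result = "works" if drive_fits_bay(drive, bay) else "won't work"
            print(f"{drive.value} drive in {bay.value} bay: {result}")
    print(f"Gen4 drive in Gen3 slot links at Gen{link_generation(4, 3)}")
```

The asymmetry is the whole point: the generation check is a simple minimum, while the U.2/U.3 check is not symmetric, which is why a U.3 drive is the safer buy if you're unsure what bay it will end up in.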

8TB U.2 Gen3 - $400 - https://www.amazon.com/dp/B0C6B2PGMC/?tag=snbforums-20

8TB U.2 Gen4 - $500 - https://www.amazon.com/dp/B0B7Y4TFJR/?tag=snbforums-20

16TB U.2 Gen4 - $1000 - https://www.amazon.com/dp/B0C6BR89C2/?tag=snbforums-20

8TB U.3 Gen4 - $600 - https://www.amazon.com/dp/B0BHCMYH9Z/?tag=snbforums-20

16TB U.3 Gen4 - $1000 - https://www.amazon.com/dp/B0BHGSXB4T
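To put the drives above on equal footing, here's a quick cost-per-TB calculation using the prices quoted in this post (USD at the time of posting; they will drift). `DRIVES` and `cost_per_tb` are illustrative names, not from any tool.

```python
# (label, capacity in TB, price in USD) as quoted in the post
DRIVES = [
    ("8TB U.2 Gen3", 8, 400),
    ("8TB U.2 Gen4", 8, 500),
    ("16TB U.2 Gen4", 16, 1000),
    ("8TB U.3 Gen4", 8, 600),
    ("16TB U.3 Gen4", 16, 1000),
]


def cost_per_tb(capacity_tb: float, price_usd: float) -> float:
    """Price divided by raw (unformatted) capacity."""
    return price_usd / capacity_tb


if __name__ == "__main__":
    # Cheapest per TB first; sorted() is stable, so ties keep list order.
    for label, tb, price in sorted(DRIVES, key=lambda d: cost_per_tb(d[1], d[2])):
        print(f"{label:<14} ${cost_per_tb(tb, price):.2f}/TB")
```

With these numbers the 8TB U.2 Gen3 works out to $50/TB, the 8TB U.2 Gen4 and both 16TB drives to $62.50/TB, and the 8TB U.3 Gen4 to $75/TB.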

U.2 PCIE adapters

$21 - https://www.amazon.com/dp/B099185SSV/?tag=snbforums-20

$40 - https://www.amazon.com/dp/B01D2PXUAQ/?tag=snbforums-20

U.3 PCIE adapters

$15 - https://www.amazon.com/dp/B0C3C6MHH3/?tag=snbforums-20

$16 - https://www.amazon.com/dp/B0C3HGSBGD/?tag=snbforums-20

$27 - https://www.amazon.com/dp/B0C8L6DPZX/?tag=snbforums-20

$42 - https://www.amazon.com/dp/B09RTZSN9X/?tag=snbforums-20

Cages

U.2/U.3 2-bay - $230 - https://www.amazon.com/dp/B0BHLTDZZ8/?tag=snbforums-20

U.2/U.3 4-bay - $400 - https://www.amazon.com/dp/B07B9HK4QG/?tag=snbforums-20

With newer motherboards killing off PCIe slots to make room for more M.2 slots, it's a toss-up which direction to go. But with a 4TB Gen4 NVMe drive at ~$200, these U.2/U.3 options are compelling: an 8TB Gen4 M.2 runs ~$800, and there are no 16TB options in the M.2 format.

4 x 4TB M.2 runs about $800 shipped.

2 x 8TB U.2 is the same price, but in my case it would mean switching from RAID 10 to RAID 1.

Stepping up to a 16TB array, though, would be a significant jump in cost at ~$2000 for a RAID 1 pair.

Still, with a 16TB U.2/U.3 drive costing about the same as an 8TB NVMe M.2 drive, it's considerably more affordable, at roughly half the cost per TB of capacity.
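The array math above can be sketched in Python, assuming RAID 1 is a plain mirror (usable space equals one drive) and RAID 10 halves the raw capacity. Prices are the post's approximate figures; the 2 x 8TB M.2 mirror is added only as a comparison point built from the ~$800 8TB M.2 price, and `Array` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class Array:
    label: str
    drives: int
    drive_tb: float
    drive_usd: float
    raid: str  # "R1" (mirror) or "R10" (striped mirrors)

    def usable_tb(self) -> float:
        if self.raid == "R1":
            return self.drive_tb  # every drive holds a full copy
        if self.raid == "R10":
            return self.drives * self.drive_tb / 2  # half the raw space
        raise ValueError(f"unsupported RAID level: {self.raid}")

    def total_usd(self) -> float:
        return self.drives * self.drive_usd

    def usd_per_usable_tb(self) -> float:
        return self.total_usd() / self.usable_tb()


CONFIGS = [
    Array("4 x 4TB M.2 Gen4", 4, 4, 200, "R10"),
    Array("2 x 8TB U.2 Gen3", 2, 8, 400, "R1"),
    Array("2 x 16TB U.2 Gen4", 2, 16, 1000, "R1"),
    Array("2 x 8TB M.2 Gen4", 2, 8, 800, "R1"),  # comparison point only
]

if __name__ == "__main__":
    for a in CONFIGS:
        print(f"{a.label:<18} {a.usable_tb():>3.0f}TB usable  "
              f"${a.total_usd():>5.0f}  ${a.usd_per_usable_tb():.2f}/TB")
```

The first two rows both land at about $800 for 8TB usable. The 16TB U.2 mirror comes to $125 per usable TB, versus $200 for a mirror of 8TB M.2 drives.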


https://www.amazon.com/s?k=Oculink+...1:qNKm69wi0NEUsBx0HFbdRxwkiYTmnI/9WpZ94SJ7bnA

Then there are Oculink (SFF-8611) cards, cables, and adapters that let you use either a PCIe slot or an M.2 slot to connect U.2/U.3 drives as well.

I could swap the M.2 drives for these @ $15 each - https://www.amazon.com/dp/B0BDYKG9RQ/?tag=snbforums-20

Then grab the cables - $29 each - https://www.amazon.com/dp/B07ZBKCFND/?tag=snbforums-20

At ~$45 per drive, converting over might be a budget issue, though, for the ability to up your storage game. Finding high-density motherboards with Gen4 slots might also be hard without going the M.2 conversion route. I've had some boards with 5-6 slots in the past, but the slot speeds, sizes, and electrical wiring vary. Moving to something like X299 would give tons of slots, though all at Gen3 speeds.
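Rough numbers for the conversion route, as a Python sketch. It assumes the $15 part is an M.2-to-Oculink adapter and the $29 part is an Oculink-to-U.2/U.3 cable (an assumption based on the post's wording), which sums to $44 per drive, in line with the ~$45 above. The bandwidth figures use the PCIe 3.0/4.0 signaling rates (8 and 16 GT/s per lane, 128b/130b encoding) and ignore protocol overhead, so real-world throughput will be lower. Function names are hypothetical.

```python
ADAPTER_USD = 15  # per-drive M.2-to-Oculink adapter (assumed from the post)
CABLE_USD = 29    # per-drive Oculink-to-U.2/U.3 cable (assumed from the post)

# Per-lane signaling rate in GT/s; PCIe 3.0 and 4.0 both use 128b/130b encoding.
GT_PER_S = {3: 8.0, 4: 16.0}
ENCODING_EFFICIENCY = 128 / 130


def conversion_cost(n_drives: int) -> int:
    """Adapter plus cable for each drive."""
    return n_drives * (ADAPTER_USD + CABLE_USD)


def link_gb_per_s(gen: int, lanes: int = 4) -> float:
    """Approximate one-direction link bandwidth in GB/s after line encoding."""
    return GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes / 8  # bits -> bytes


if __name__ == "__main__":
    print(f"4 drives: ${conversion_cost(4)}")
    for gen in (3, 4):
        print(f"Gen{gen} x4: ~{link_gb_per_s(gen):.2f} GB/s")
```

That works out to about 3.94 GB/s for a Gen3 x4 link and 7.88 GB/s for Gen4 x4, so an all-Gen3 board like X299 roughly halves the per-drive ceiling for Gen4 drives.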

A U.3 NVMe drive is backward compatible with U.2 drive bays, but a U.2 NVMe drive can't be used in a U.3 drive bay.


Evolving Storage with SFF-TA-1001 (U.3) Universal Drive Bays

U.3 is a term that refers to compliance with the SFF-TA-1001 specification, which also requires compliance with the SFF-8639 Module specification.

SSD Form Factors | SNIA

Updated March 2023 Solid-state drives (SSDs) are commonly used in client, hyperscale and enterprise compute environments. They typically come in three flavors: NVMe®, SAS, and SATA. Since SSDs are made from flash memory, they can be built in many different form factors. This resource guide is...
