Realize that when sending data out a NIC there are only two speeds: MAX bps and 0 bps. QoS here is more of a prioritization scheme: if three devices want to send a packet out a NIC at the same time, the packet with the highest QoS level leaves first.
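A minimal sketch of that strict-priority behavior, assuming a single egress queue where a higher number means a higher QoS level and packets with equal levels leave in arrival order. The class and device names are hypothetical, not taken from any router firmware.

```python
import heapq
import itertools


class StrictPriorityQueue:
    """Strict-priority egress queue: the highest QoS level always leaves
    first; packets with the same level leave in arrival order (FIFO)."""

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()  # tie-breaker for equal QoS levels

    def enqueue(self, qos_level, packet):
        # heapq is a min-heap, so store -qos_level to pop the highest level first.
        heapq.heappush(self._heap, (-qos_level, next(self._arrival), packet))

    def dequeue(self):
        # Returns None when there is nothing to send (the "0 bps" state).
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


# Three devices hand a packet to the NIC at the same moment.
q = StrictPriorityQueue()
q.enqueue(1, "laptop-bulk")
q.enqueue(5, "voip-phone")
q.enqueue(3, "game-console")
print([q.dequeue() for _ in range(len(q))])
# ['voip-phone', 'game-console', 'laptop-bulk']
```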

Say a device with a low QoS level has multiple packets to send and begins sending. If a device with a higher QoS setting then has a packet to send, it has to wait until the lower-QoS device finishes sending the packet currently being transmitted.
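How long that wait is depends on the packet size and the link speed, because a packet already on the wire is not interrupted. A rough sketch of the arithmetic, assuming a 1500-byte packet (a typical Ethernet MTU) and a hypothetical 1 Mbit/s uplink:

```python
def serialization_delay_ms(packet_bytes, link_bps):
    """Time needed to clock packet_bytes onto a link of link_bps, in ms."""
    return packet_bytes * 8 * 1000 / link_bps


def high_priority_wait_ms(low_pkt_bytes, link_bps, fraction_already_sent):
    """Extra wait for a high-priority packet that arrives while a
    low-priority packet is mid-transmission. The in-flight packet is not
    preempted, so the high-priority packet waits for its remaining bytes."""
    remaining_bytes = low_pkt_bytes * (1 - fraction_already_sent)
    return serialization_delay_ms(remaining_bytes, link_bps)


# A full-size 1500-byte packet on a 1 Mbit/s uplink: 12,000 bits / 1e6 bit/s
print(serialization_delay_ms(1500, 1_000_000))       # 12.0 (ms)
# High-priority packet arrives halfway through that packet
print(high_priority_wait_ms(1500, 1_000_000, 0.5))   # 6.0 (ms)
```

On a 1 Gbit/s link the same packet takes 1000 times less time to send, which is why this head-of-line wait mostly matters on slow links.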

More expensive or sophisticated routers can be configured to send data at a specific rate, but that is only the effective (average) rate. The actual rate on the wire is still full speed; it's done with smoke and mirrors.
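The "smoke and mirrors" boils down to duty-cycle arithmetic: the NIC sends at full line rate, then stays idle long enough that the average comes out to the configured rate. A sketch assuming a hypothetical 100 Mbit/s port shaped to 10 Mbit/s with 1 ms bursts (real shapers work per packet, not in fixed bursts):

```python
def average_rate_bps(line_rate_bps, busy_s, idle_s):
    """Average throughput when the NIC alternates between sending at full
    line rate for busy_s seconds and sending nothing for idle_s seconds."""
    return line_rate_bps * busy_s / (busy_s + idle_s)


def idle_gap_for_target(line_rate_bps, target_bps, busy_s):
    """Idle time needed after each full-speed burst so that the average
    rate works out to target_bps."""
    if not 0 < target_bps <= line_rate_bps:
        raise ValueError("target_bps must be in (0, line_rate_bps]")
    return busy_s * (line_rate_bps / target_bps - 1)


# A 100 Mbit/s port shaped to an effective 10 Mbit/s: every 1 ms burst at
# full speed must be followed by roughly 9 ms of silence.
gap = idle_gap_for_target(100e6, 10e6, 0.001)
print(gap, average_rate_bps(100e6, 0.001, gap))
```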

In reality, QoS has many methodologies, each with its own strengths and limitations.

I don't think that is correct.

Packets go into a queue and enter a hierarchical token system. This system dequeues packets according to user-set priority, e.g. high-priority packets are dequeued before low-priority packets.

Additionally, the token system can be configured to dequeue packets at a set rate so as to fit within user-defined rates (e.g. a bandwidth limit).
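The rate-limiting half of that can be sketched with a single token bucket, which is the building block behind hierarchical token schemes such as Linux's HTB. This is a simplified, non-hierarchical model under assumed numbers (1 Mbit/s limit, 1500-byte packets, a bucket holding two packets' worth of tokens); the class and function names are hypothetical.

```python
class TokenBucket:
    """Single token bucket. Tokens (in bits) accrue at rate_bps up to
    burst_bits; a packet may be dequeued only if enough tokens are on hand."""

    def __init__(self, rate_bps, burst_bits):
        self.rate_bps = rate_bps
        self.burst_bits = burst_bits
        self.tokens = float(burst_bits)  # start with a full bucket
        self.last_t = 0.0

    def _refill(self, now):
        elapsed = now - self.last_t
        self.tokens = min(self.burst_bits, self.tokens + elapsed * self.rate_bps)
        self.last_t = now

    def try_dequeue(self, packet_bits, now):
        """Spend tokens and return True if the packet may leave at time now."""
        self._refill(now)
        if self.tokens >= packet_bits:
            self.tokens -= packet_bits
            return True
        return False  # not enough tokens: packet stays queued


def simulate(rate_bps=1_000_000, burst_bits=24_000, packet_bits=12_000,
             duration_s=10, ticks_per_s=1000):
    """Always-backlogged queue of 1500-byte packets drained through the bucket."""
    bucket = TokenBucket(rate_bps, burst_bits)
    sent_bits = 0
    for tick in range(duration_s * ticks_per_s + 1):
        now = tick / ticks_per_s
        # Dequeue as many packets as the available tokens allow at this instant.
        while bucket.try_dequeue(packet_bits, now):
            sent_bits += packet_bits
    return sent_bits


sent = simulate()
print(sent / 10)  # close to the configured 1,000,000 bit/s
```

The bucket size sets how much of a burst can leave back-to-back at line rate; over a long window the dequeue rate converges on the configured rate no matter how fast packets arrive.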

--

The underlying method used to achieve this is kind of smoke and mirrors, but it should work, and work well!

You are correct that packets can initially enter the download stream at your full link rate, but the QoS system works well to tame the speeds down to user-configurable rates.

Incoming bandwidth limiting is not an issue with TCP streams. With TCP, the sending server adjusts its transfer speed based on how long it waits for returned ACK messages (and whether they arrive at all). TCP also has an ECN (Explicit Congestion Notification) flag feature, so it can slow traffic down even faster instead of relying on ACK delays. TCP also ramps up to full bandwidth gradually (slow start) instead of saturating the link right away. This designed behavior fits well with how QoS treats these packets behind the scenes: QoS will either increase these delays or drop packets, so in the end the sending server sends at the rate the receiving user wants.
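Here is a very simplified, Reno-style model of that feedback loop, one step per round trip. It assumes the QoS limit can be expressed as a hypothetical "packets per RTT" ceiling above which the shaper drops (or ECN-marks) a packet; real TCP stacks such as CUBIC use different growth curves, so treat this as an illustration of the principle only.

```python
def aimd_trace(limit_pkts_per_rtt, rtts=200, ssthresh=64.0):
    """Very simplified Reno-style sender, one step per round-trip time.
    cwnd = packets sent per RTT. limit_pkts_per_rtt stands in for the QoS
    limit: sending more than that per RTT overflows the shaper and a packet
    is dropped (or ECN-marked)."""
    cwnd = 1.0
    trace = []
    for _ in range(rtts):
        trace.append(cwnd)
        if cwnd > limit_pkts_per_rtt:
            # Loss or ECN mark seen: multiplicative decrease (halve cwnd).
            ssthresh = max(cwnd / 2, 2.0)
            cwnd = ssthresh
        elif cwnd < ssthresh:
            cwnd *= 2   # slow start: window doubles every RTT
        else:
            cwnd += 1   # congestion avoidance: +1 packet per RTT
    return trace


trace = aimd_trace(limit_pkts_per_rtt=40)
steady = trace[50:]
print(max(steady), sum(steady) / len(steady))
```

After the initial slow-start overshoot, the window saw-tooths between roughly half the limit and just over it, so the sender never sustains more than the QoS limit allows.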

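UDP limiting is a different story: UDP itself has no congestion control, so unless the application adds its own, the sender won't slow down when QoS delays or drops its packets.

Your explanation isn't entirely inaccurate, but it could use some updating, since limiting should be working!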

--

The end result with QoS is that traffic is forced to meet user-set limits by either being queued or, if it exceeds the queue length, dropped.

The user should always receive packets at their desired rate, even if the actual link transfer rate is higher, since the flagged packets should be queued (held in buffers) or dropped instead of being passed through to the user!
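A toy tick-based model of "queued or dropped", assuming a sender that never slows down (like plain UDP) pushing 15 packets per tick into a queue that the QoS system drains at a user-set 10 packets per tick, with room for 50 packets. All numbers and names are hypothetical.

```python
from collections import deque


def shape_with_tail_drop(arrivals_per_tick, service_per_tick, queue_limit, ticks):
    """A sender that never slows down (like plain UDP) feeding a QoS queue
    that is drained at a fixed user-set rate. Arrivals that find the queue
    full are tail-dropped."""
    queue = deque()
    delivered = dropped = 0
    for tick in range(ticks):
        for _ in range(arrivals_per_tick):
            if len(queue) < queue_limit:
                queue.append(tick)      # held in the buffer
            else:
                dropped += 1            # queue full: packet discarded
        for _ in range(min(service_per_tick, len(queue))):
            queue.popleft()             # released toward the user at the set rate
            delivered += 1
    return delivered, dropped, len(queue)


# Sender pushes 15 packets/tick, user limit is 10 packets/tick, buffer holds 50.
delivered, dropped, backlog = shape_with_tail_drop(15, 10, 50, 1000)
print(delivered, dropped, backlog)  # 10000 4960 40
```

The user receives exactly the configured 10 packets per tick; once the buffer fills, the excess 5 packets per tick are simply discarded.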

--

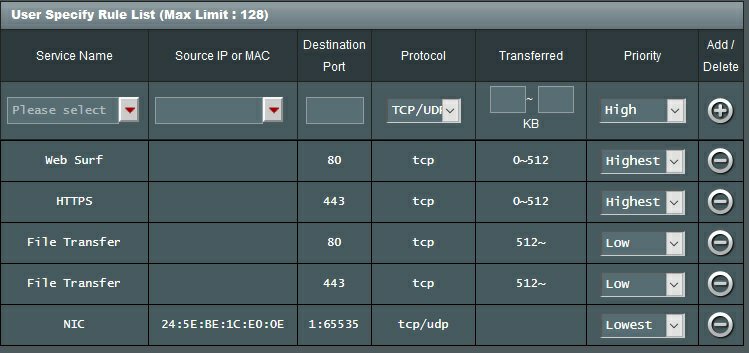

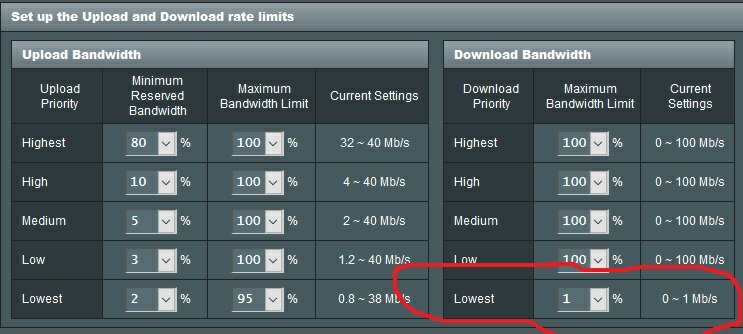

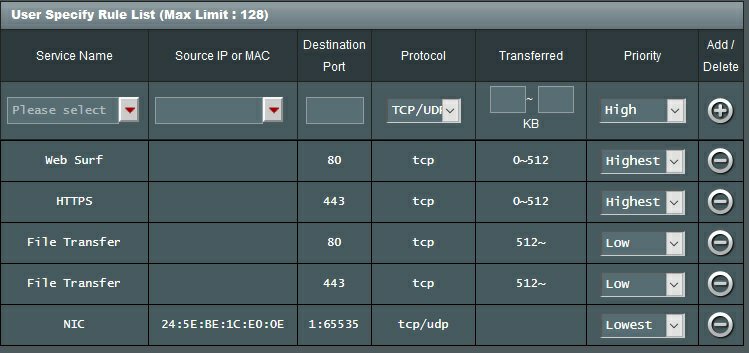

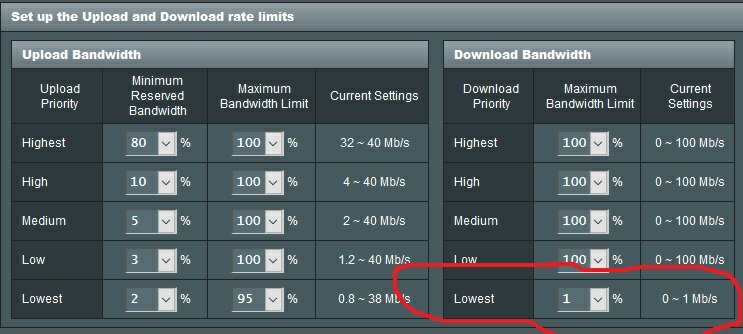

Something is not working right with the QoS setup above, since that behavior is not happening.

Send the output of

It could be a UI glitch where you are setting "Minimum Reserved Bandwidth" instead of what is labeled as "Maximum Available Bandwidth".

(The upload category has both settings, but the download category only has one.)
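Why that mix-up would look exactly like "limiting isn't working": a minimum reservation is a floor, not a ceiling, so a class with only a minimum can still grab all idle capacity. A sketch using a generic max-min (water-filling) allocator, assuming a hypothetical 100 Mbit/s link; it is loosely analogous to HTB's `rate` (guaranteed) and `ceil` (maximum) and is not the router's actual firmware logic.

```python
def allocate(link, classes):
    """Water-filling allocation for classes given as
    name -> (demand, min_reserved, max_available or None).
    Each class first gets up to its minimum reservation; spare link capacity
    is then shared out, but never beyond a class's demand or maximum."""
    alloc = {n: min(d, mn) for n, (d, mn, _) in classes.items()}
    spare = link - sum(alloc.values())

    def headroom(n):
        d, _, mx = classes[n]
        cap = d if mx is None else min(d, mx)
        return cap - alloc[n]

    hungry = sorted((n for n in classes if headroom(n) > 0), key=headroom)
    while hungry and spare > 0:
        share = spare / len(hungry)
        n = hungry[0]  # class with the least headroom
        if headroom(n) <= share:
            # It can be fully satisfied; give it everything it can use.
            give = headroom(n)
            alloc[n] += give
            spare -= give
            hungry.pop(0)
        else:
            # Every remaining class can absorb an equal share.
            for m in hungry:
                alloc[m] += share
            spare = 0
    return alloc


LINK = 100  # Mbit/s, hypothetical

# Intended: cap downloads at 10 Mbit/s ("Maximum Available Bandwidth").
intended = allocate(LINK, {"downloads": (100, 0, 10), "voip": (2, 2, None)})
# Glitch: the 10 was stored as "Minimum Reserved Bandwidth" with no maximum.
glitched = allocate(LINK, {"downloads": (100, 10, None), "voip": (2, 2, None)})
print(intended["downloads"], glitched["downloads"])  # 10 98.0
```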