First of all, greetings and kindest regards to Merlin. Thank you for your work.

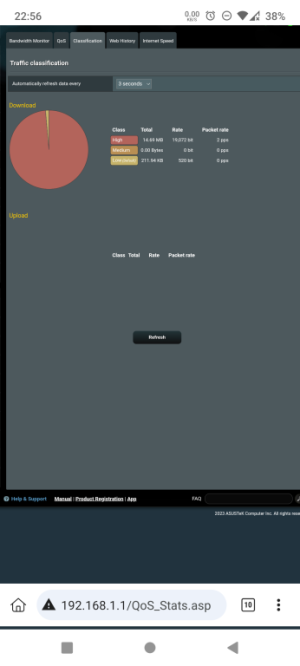

Now, onto the question at hand. I seem to have a small issue with traffic classification on the Adaptive QoS page (the one with the pie chart).

Sometimes the page shows the pie chart with nothing under it (as seen in the attached picture), and sometimes it shows a list of connected clients, used ports and untracked traffic under the pie chart... then, after a few seconds, that list promptly disappears and I cannot, for the life of me, get it back. Quite random. Only the lonely pie chart remains, ever vigilant.

Problem is, I would like that list back, or at least to have it shown the entire time under the pie chart, where it proudly belongs.

Can someone help?

The router is an AX86U Pro running the latest Merlin firmware release (3004_388.5).

Thank you.