First, sorry about the typo: that should obviously be a P35 motherboard, not a P53!

Currently I'm using the onboard network cards of both motherboards. My home PC's motherboard is a Gigabyte GA-965P DQ6, and the motherboard of my Windows Home Server (WHS) is a Gigabyte GA-G33M-DS2R. I have two Intel PCI-E NICs on order, because I believe they will achieve even better speeds than the onboard ones.

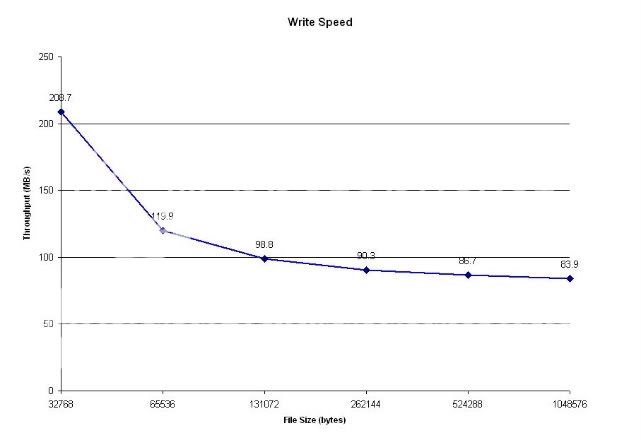

I've only measured the write speed of my server so far. I did this by copying all the ISO files of my DVDs (several hundred of them, at least 4 GB apiece) that were still on my PC to my WHS. I used Total Commander for this because, unlike Windows Explorer, it actually shows the copy speed in MB/s. Not very scientific, I know, but a nice indication nonetheless.
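
For a slightly more repeatable number than reading Total Commander's display, a small script can time a single copy and work out the average speed itself. This is only a rough sketch in Python, assuming the WHS share is reachable as an ordinary UNC path. The function name `measure_copy_speed` is made up for illustration, and it counts 1 MB as 1,000,000 bytes, which may not match the unit Total Commander uses.

```python
import os
import shutil
import time


def measure_copy_speed(src: str, dst: str) -> float:
    """Copy src to dst and return the average throughput in MB/s.

    Assumption: 1 MB = 1,000,000 bytes (decimal megabytes).
    """
    size_bytes = os.path.getsize(src)

    start = time.perf_counter()
    shutil.copyfile(src, dst)  # plain file copy, no metadata
    elapsed = time.perf_counter() - start

    # Guard against a zero duration on extremely fast, tiny copies.
    elapsed = max(elapsed, 1e-9)
    return size_bytes / 1_000_000 / elapsed
```

For example, `measure_copy_speed(r"D:\ISO\movie.iso", r"\\WHS\Videos\movie.iso")` (hypothetical paths) would return the average write speed for that one file. Write caching can make short copies look faster than the network really is, so large files like these ISOs should give a more honest figure.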

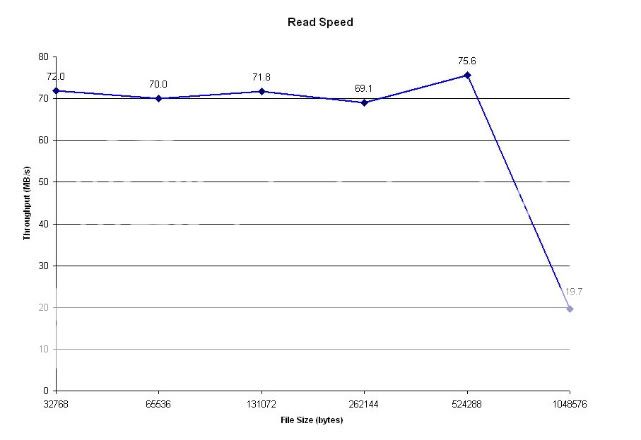

I haven't tried copying anything back from the server to my PC yet. I'll do that tonight if you're interested.