Dennis Wood

Senior Member

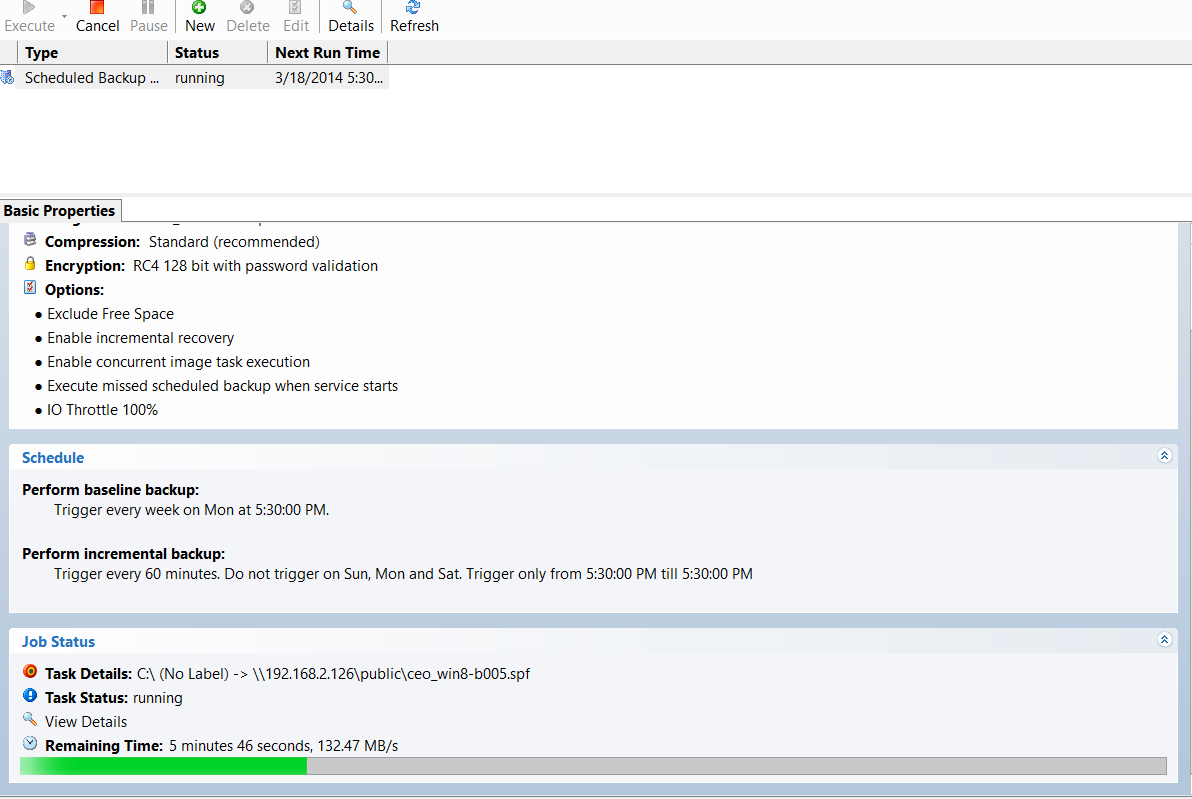

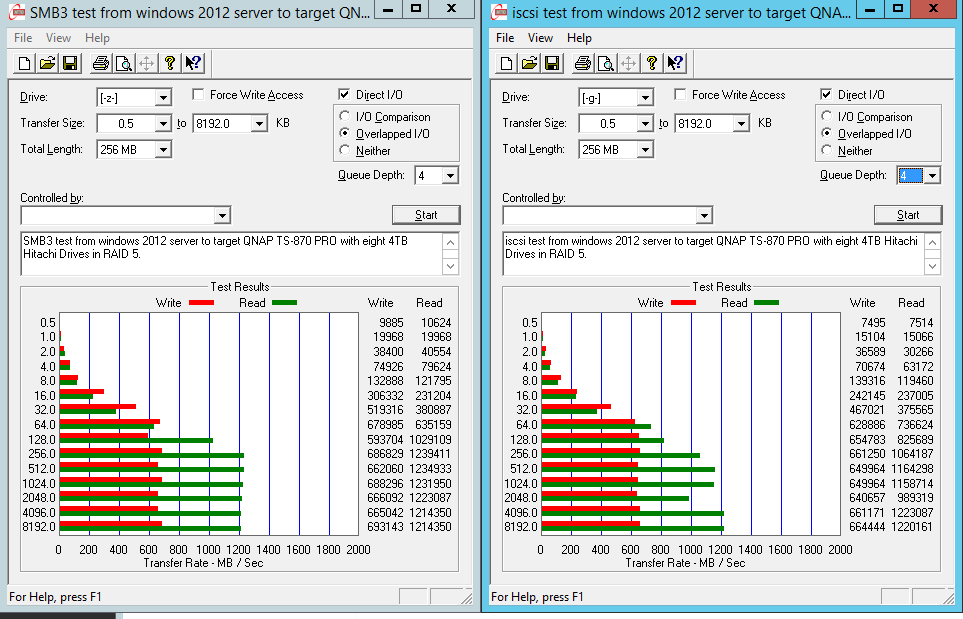

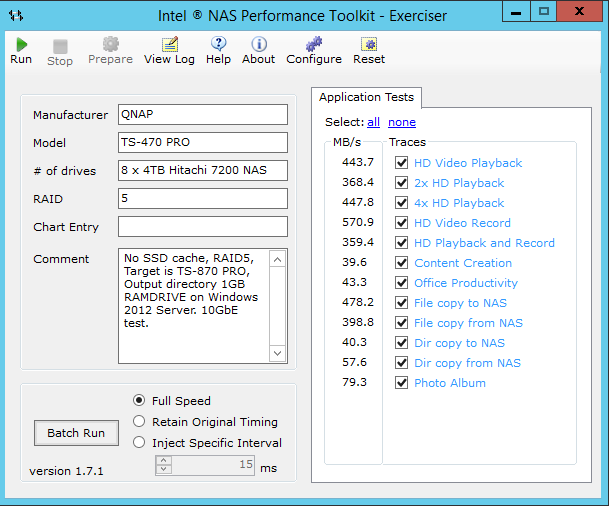

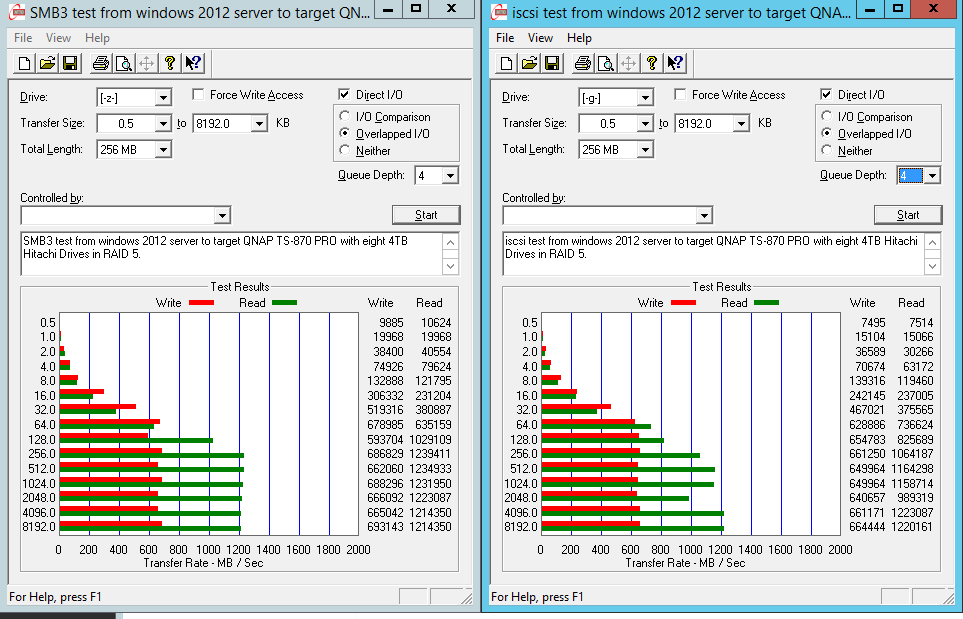

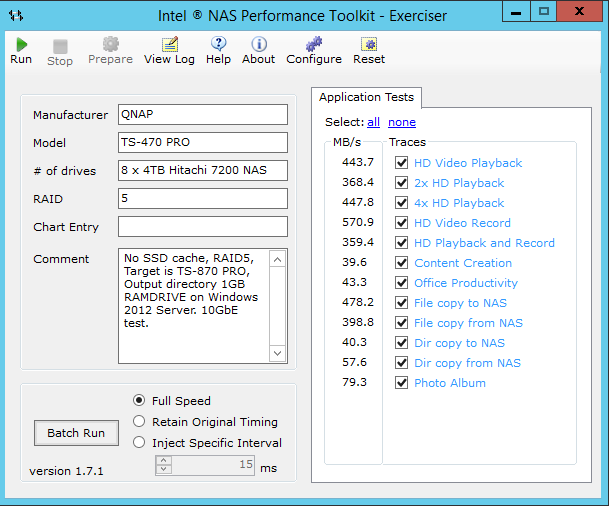

Here are a few results using the QNAP TS-870 Pro in RAID5 mode with 8 x 4TB Hitachi 7200 RPM drives (about 28TB usable). Note that the queue depth in the ATTO tests is 4; a single file copy will not reach 1200MB/s from the NAS. It's more in the 700MB/s range. The ATTO tests show iSCSI results as well as SMB3 (standard file share). Jumbo frames are enabled on both the NAS and the Windows Server 2012 test machine. RAM was limited to 2GB in the NASPT tests, so those results are accurate rather than inflated by caching.
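
For reference, the "about 28TB usable" figure follows from RAID 5 giving up one drive's worth of capacity to parity. Here is a minimal Python sketch of that arithmetic. It assumes identical drives and ignores filesystem overhead and TB vs TiB reporting. The function name `raid_usable_tb` is just illustrative.

```python
# Minimal sketch: usable capacity of RAID 0 / RAID 5 arrays built from
# identical drives. Ignores filesystem overhead and TB vs TiB reporting.


def raid_usable_tb(level: int, drives: int, drive_tb: float) -> float:
    """Return usable capacity in decimal TB for RAID 0 or RAID 5."""
    if level == 0:
        # RAID 0 stripes data across every drive with no redundancy.
        return drives * drive_tb
    if level == 5:
        # RAID 5 spends one drive's worth of capacity on distributed parity.
        if drives < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        return (drives - 1) * drive_tb
    raise ValueError(f"unsupported RAID level: {level}")


if __name__ == "__main__":
    print(f"RAID 5, 8 x 4TB: {raid_usable_tb(5, 8, 4.0):.0f} TB usable")
    print(f"RAID 0, 7 x 4TB: {raid_usable_tb(0, 7, 4.0):.0f} TB usable")
```

The 7-drive RAID 0 array used for comparison lands at the same 28TB, since all seven drives hold data.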

RAID 5 write results are better than I expected, with speeds that likely rival the RocketRaid 2720 RAID card in the Windows server. The setup we end up with will include both a Windows Server 2012 machine and the TS-870 Pro, connected to our design/video/graphics 10GbE workstations via Netgear's 8-port switch.

In RAID 0 with 7 drives, write speeds were about 800MB/s, so the hit for parity in RAID5 is surprisingly low. The plateau you see at 1200MB/s in the ATTO tests is the limit of a single 10GbE connection. This correlates nicely with the NTTTCP tests on Windows clients, where I'm seeing 1180MB/s max.
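
The ~1200MB/s ceiling can be sanity-checked with back-of-envelope arithmetic. 10Gbit/s is 1250MB/s raw, and Ethernet framing plus IP/TCP headers take a slice of that. The sketch below assumes IPv4 and TCP headers without options and the standard 38 bytes of per-frame wire overhead. The constants and function name are illustrative, not measured values.

```python
# Back-of-envelope ceiling for TCP payload throughput over one 10GbE link.
# Assumptions: IPv4 + TCP headers without options (40 bytes), and standard
# Ethernet per-frame wire overhead: preamble/SFD 8 + header 14 + FCS 4 +
# inter-frame gap 12 = 38 bytes. TCP options and ACK traffic lower this a bit.

LINE_RATE_BPS = 10e9     # 10 Gbit/s line rate
ETH_WIRE_OVERHEAD = 38   # bytes on the wire per frame beyond the MTU
IP_TCP_HEADERS = 40      # IPv4 (20) + TCP (20), no options


def max_payload_mb_per_s(mtu: int) -> float:
    """Return the theoretical TCP payload rate in MB/s (10**6 bytes/s)."""
    payload = mtu - IP_TCP_HEADERS        # TCP payload bytes per frame
    on_wire = mtu + ETH_WIRE_OVERHEAD     # bytes the frame occupies on the wire
    raw_bytes_per_s = LINE_RATE_BPS / 8   # 1.25e9 bytes/s
    return raw_bytes_per_s * payload / on_wire / 1e6


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: ~{max_payload_mb_per_s(mtu):.0f} MB/s")
```

This works out to roughly 1187MB/s at a 1500-byte MTU and roughly 1239MB/s with 9000-byte jumbo frames (assuming 9000 is the jumbo size in use). A measured 1180MB/s max therefore sits close to what the wire allows.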

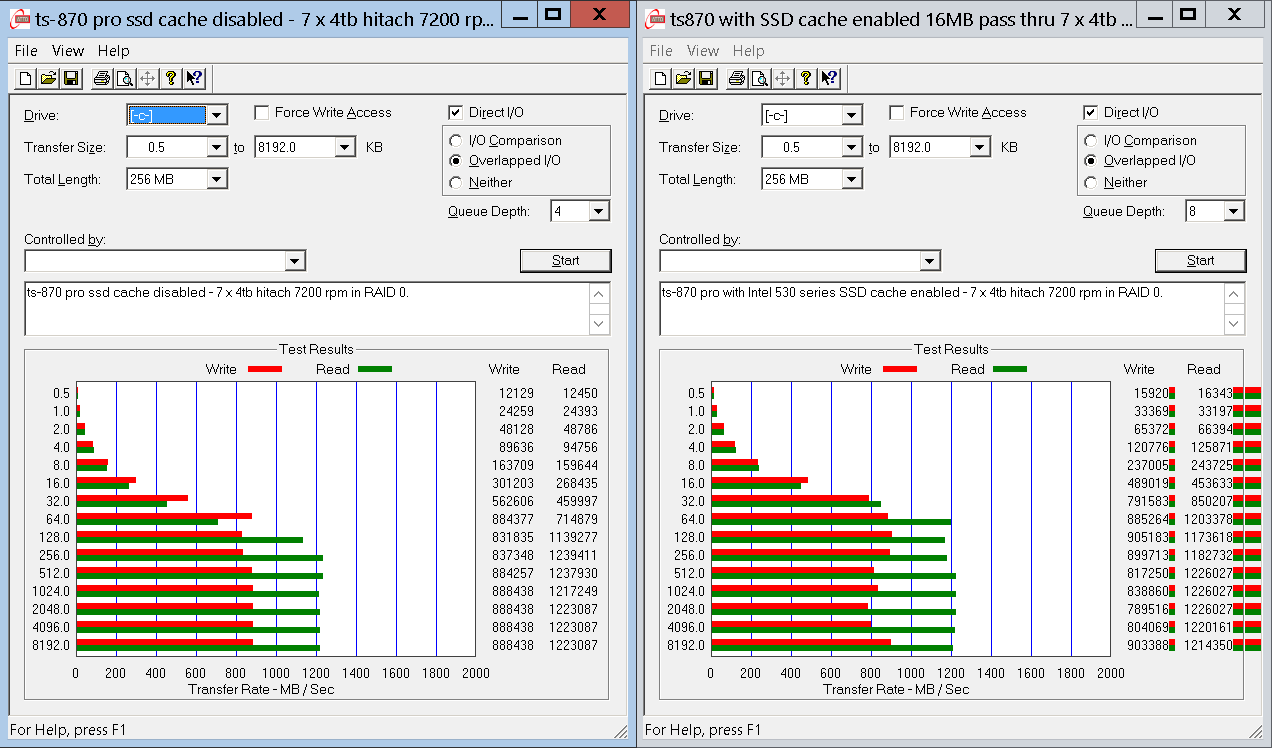

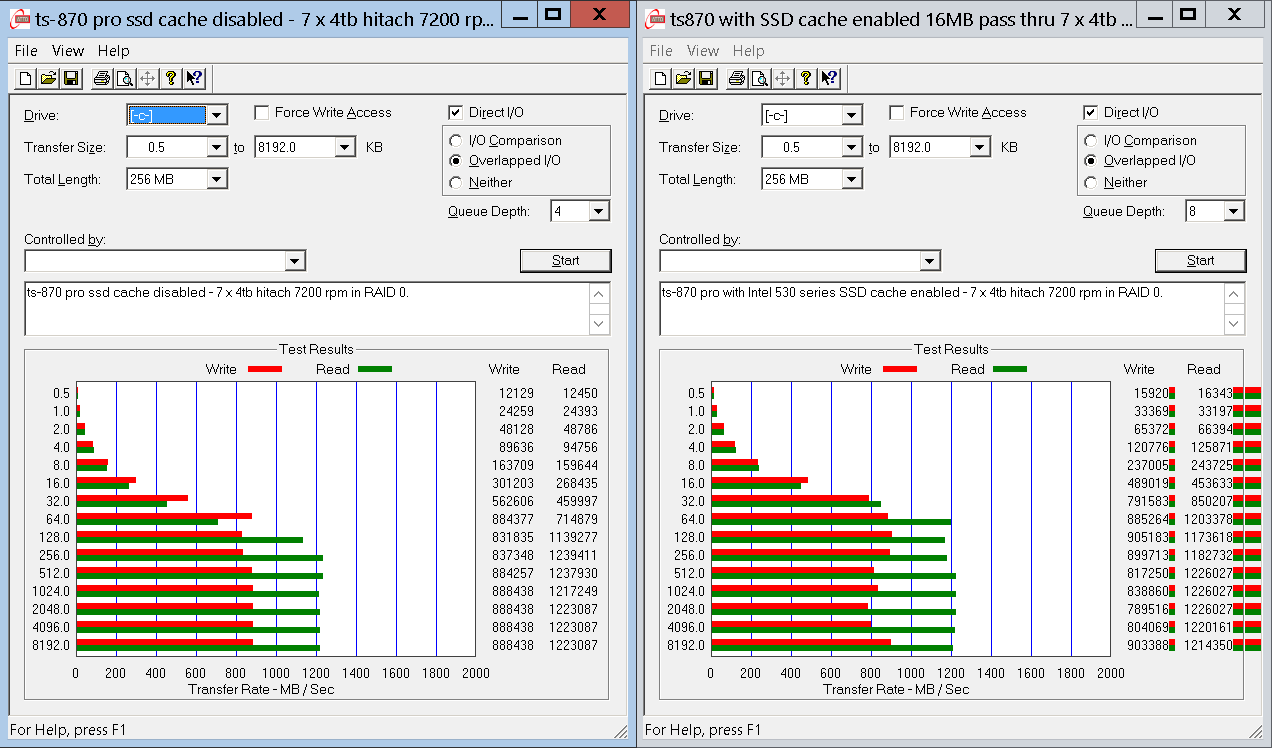

Curious to see how SSD caching might affect results, I set up a 7-disk RAID 0 array and tested with and without the SSD cache enabled. There is an option in the SSD cache interface to bypass the cache for files larger than 16MB, which I toggled on. The results reflect that setting.
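
The bypass option can be thought of as a simple routing rule on file size. The following is a hypothetical model of that policy, not QNAP's actual implementation. The names `route_io` and `IoRequest`, and the reading of "16MB" as 16 MiB, are assumptions for illustration.

```python
# Hypothetical model of a "bypass the SSD cache for large files" rule.
# NOT QNAP's implementation; it only illustrates the policy as described,
# using the 16MB threshold (assumed here to mean 16 MiB).

from dataclasses import dataclass

BYPASS_THRESHOLD_BYTES = 16 * 1024 * 1024


@dataclass
class IoRequest:
    file_size: int  # size of the file being read/written, in bytes


def route_io(req: IoRequest, cache_enabled: bool, bypass_large: bool) -> str:
    """Return "ssd_cache" or "hdd_array" for a request under this policy."""
    if not cache_enabled:
        # No cache configured: everything is served by the disk array.
        return "hdd_array"
    if bypass_large and req.file_size > BYPASS_THRESHOLD_BYTES:
        # Files above the threshold skip the cache and hit the disks directly.
        return "hdd_array"
    return "ssd_cache"


if __name__ == "__main__":
    small = IoRequest(4 * 1024 * 1024)   # 4 MiB file
    large = IoRequest(2 * 1024 ** 3)     # 2 GiB file
    print(route_io(small, cache_enabled=True, bypass_large=True))  # ssd_cache
    print(route_io(large, cache_enabled=True, bypass_large=True))  # hdd_array
```

Under this rule, anything above the threshold is served by the disk array whether or not the cache is enabled.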
