Dennis Wood

Senior Member

After "Azazel" posted some information in this thread (http://forums.smallnetbuilder.com/showthread.php?t=15062) regarding SMB3 multichannel performance, my curiosity was piqued. After a bit of reading, it was immediately apparent that something super cool is hiding under the hood of Windows 8.1 and Server 2012.

The Server Message Block (SMB) protocol is an application-layer network protocol that provides shared access to files, printers and serial ports. It has been around for a while: Vista and Windows 7 brought SMB2, and now SMB3 is part of Windows 8.1 and Server 2012. SMB3 brings a lot of performance enhancements.

You may or may not know that link aggregation (teaming multiple NICs together) does nothing to speed up a single connection, and requires LACP-capable switches, NIC teaming drivers, etc. So for a home user, it's really a waste of time.

However, with Windows 8.1 running a RAID array as a NAS, you can simply plug two (or more) NIC ports from a dual-LAN card into your switch, do the same with two (or more) cables from a workstation, and instantly double your bandwidth with zero configuration. It just works, courtesy of SMB3.
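To make the teaming vs. multichannel difference concrete, here is a rough toy model in Python. It is only a sketch under stated assumptions: `teamed_link` is a made-up CRC-based hash standing in for whatever per-flow hashing a real switch or teaming driver uses, the IP addresses are placeholders, and SMB3 multichannel is modeled simply as "one TCP connection per NIC", which is a simplification of what Windows actually does.

```python
import zlib

LINK_MB_S = 125  # one Gigabit link is roughly 125 MB/s of raw line rate


def teamed_link(flow, n_links):
    """Toy per-flow hash (hypothetical): the same flow always lands on the same link."""
    key = "|".join(map(str, flow)).encode()
    return zlib.crc32(key) % n_links


# A single file copy over a teamed pair is one TCP flow (placeholder addresses).
single_flow = ("192.168.1.10", 50000, "192.168.1.20", 445)
teamed_links = {teamed_link(single_flow, 2) for _ in range(100)}
teamed_ceiling = len(teamed_links) * LINK_MB_S

# Assumption: multichannel opens one connection per NIC, so both links carry data.
multichannel_links = {0, 1}
multichannel_ceiling = len(multichannel_links) * LINK_MB_S

print("teaming, single copy:", teamed_ceiling, "MB/s ceiling")
print("multichannel, single copy:", multichannel_ceiling, "MB/s ceiling")
```

In this model the teamed copy never leaves one link no matter how many times it is hashed, while the multichannel copy can use both, which matches the behaviour described above.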

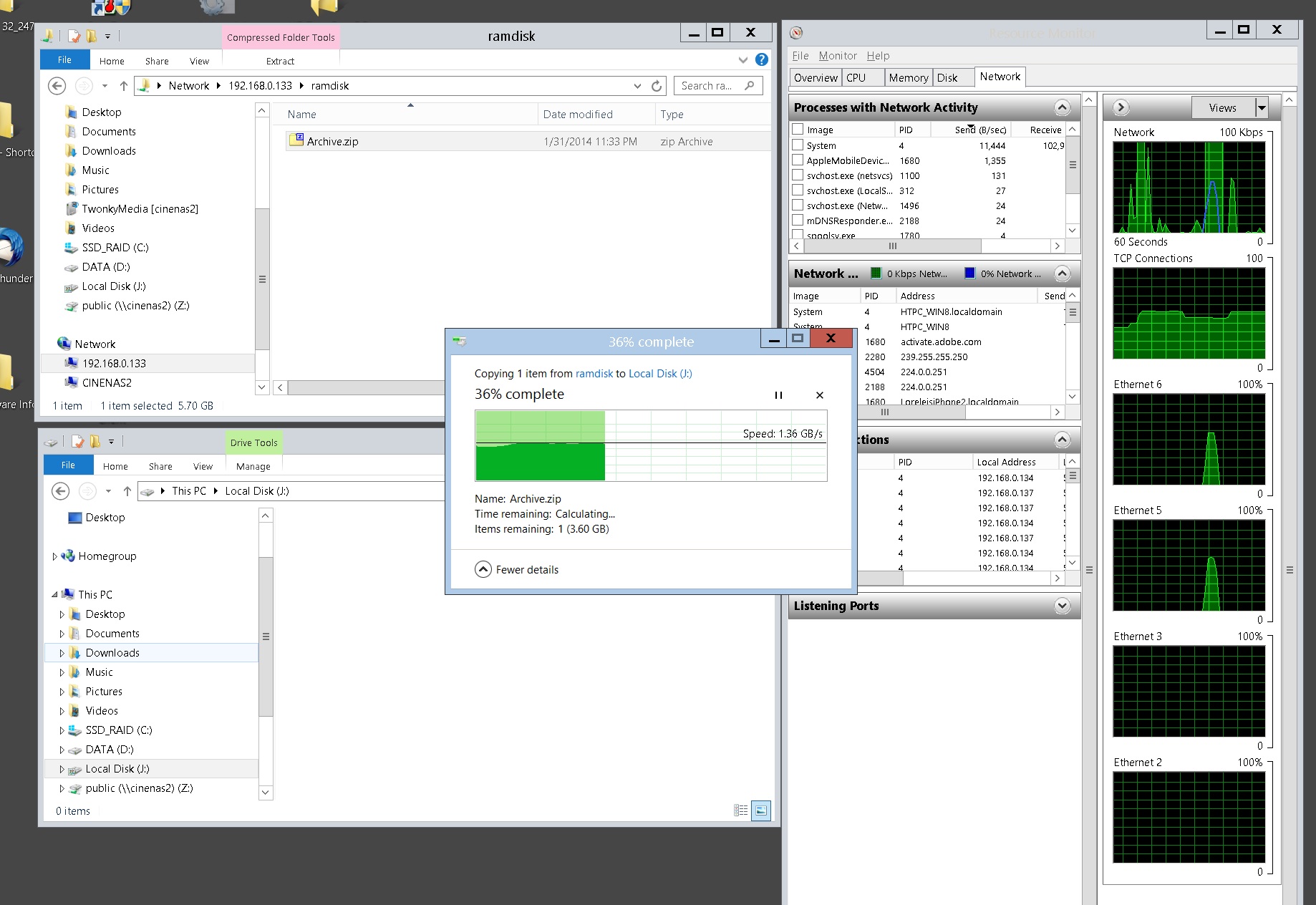

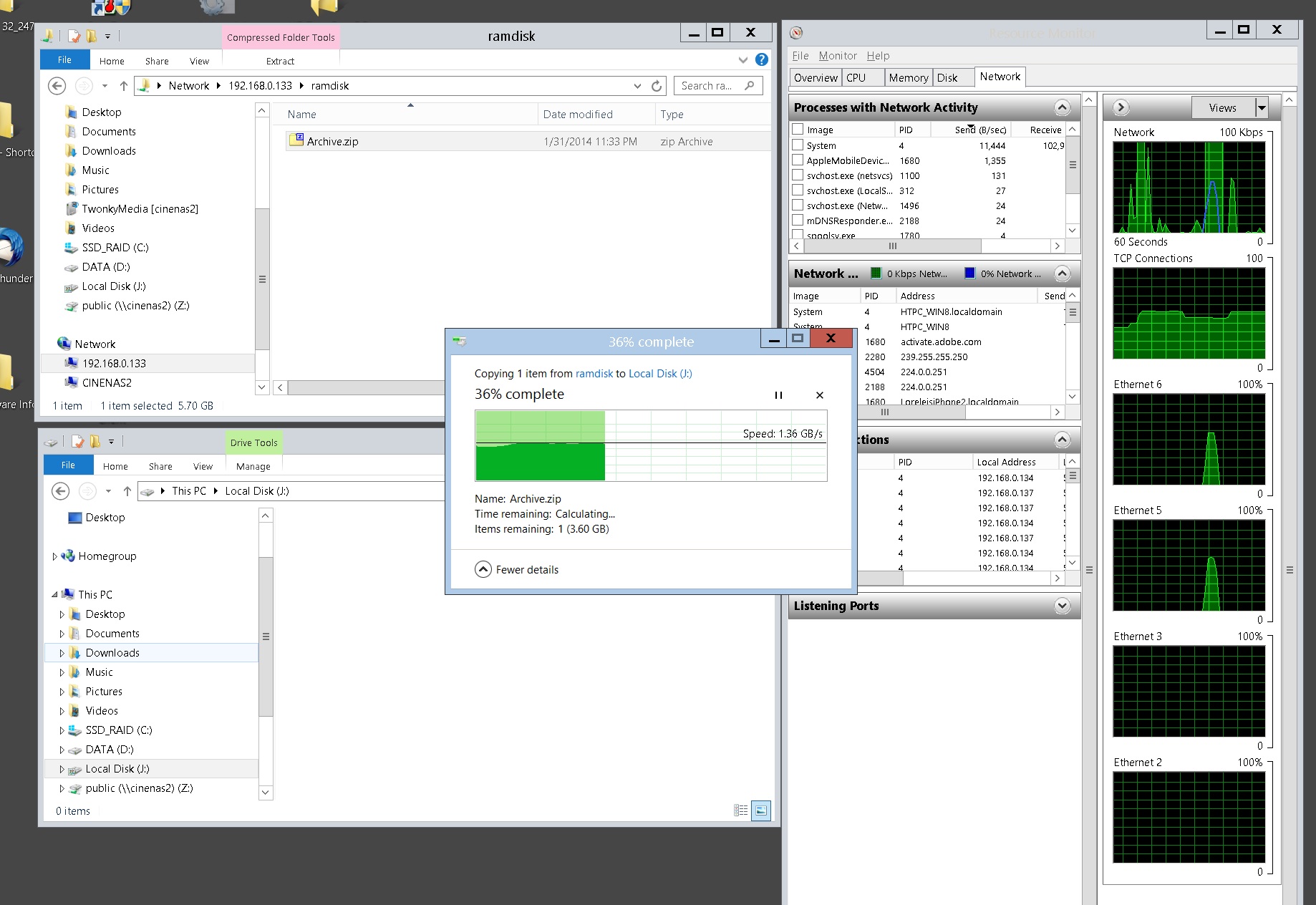

So how does a 1360 MB/s transfer between two Windows workstations sound? This is pretty crazy performance, and in this example it is being limited by the ramdrive software. I've tried ImDisk and now SoftPerfect RAM Disk, and observed a 200 MB/s difference between them. If you look at the Ethernet 5 and 6 graphs, you can see that each 10GbE port is only at 60% saturation. So ~2300 MB/s is likely doable with a fast enough system. Mine isn't! For an incredibly cost-effective shared media server for RAW video and photos (my goal in this project), this is off-the-shelf hardware. I ran the same test with dual Gigabit cards and got an instant 235 MB/s result with zero config on the switch or NIC drivers. In other words, if you have dual-LAN workstations, simply upgrading to Windows 8.1 can double your network bandwidth with zero hardware investment and no configuration.
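For anyone who wants to check the arithmetic behind those numbers, here is a quick back-of-the-envelope sketch in Python. The helper name `line_rate_mb_s` is just something made up for illustration, it uses 1 Gb/s = 125 MB/s of raw line rate and ignores protocol overhead, and the 60% figure is read off the graph, so treat the results as approximate.

```python
def line_rate_mb_s(gbit_per_s, ports):
    """Raw line rate in MB/s for a number of ports (ignores protocol overhead)."""
    return gbit_per_s * 1000 / 8 * ports


# Raw ceilings for the two setups in the post.
dual_10gbe = line_rate_mb_s(10, 2)
dual_gige = line_rate_mb_s(1, 2)

# If 1360 MB/s corresponds to ~60% port saturation, full saturation would be about:
extrapolated = 1360 / 0.60

# How close the dual Gigabit result (235 MB/s) gets to its raw ceiling.
gige_efficiency = 235 / dual_gige

print(f"dual 10GbE raw ceiling: {dual_10gbe:.0f} MB/s")
print(f"extrapolated from 60% saturation: {extrapolated:.0f} MB/s")
print(f"dual Gigabit raw ceiling: {dual_gige:.0f} MB/s")
print(f"dual Gigabit efficiency: {gige_efficiency:.0%}")
```

The extrapolation lands a little under 2300 MB/s, and the dual Gigabit result sits within a few percent of its raw ceiling, so both figures are in line with two links being used at once.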

