Okay, LOTS of updates on this. I want to add this information to the ss4200 wiki found here, but I don't have access:

http://ss4200.pbworks.com/w/page/5122751/FrontPage

So instead I will put it all in this thread.

I purchased what used to be the Legend Micro version of the SS4200-E, which was converted from a Fujitsu-branded SS4200-EWH using an IDE DOM and the EMC software. This unit works identically to my Intel SS4200-E, except that there are no references to Intel, just EMC. The firmware revision is exactly the same and both behave the same way. I'm using the Fujitsu box for these tests and the Intel one for reference.

First, some basic information that helped me get started. Enabling SSH and using PuTTY to log into the Linux system at the heart of the unit helps tremendously when poking around and figuring out what it is doing at a granular level.

To enable ssh access, there is a hidden 'support' page at:

http://NAS-IP/support.html

You can enable it there. Once it is enabled, you can log into the system with PuTTY as root, using the password soho followed by your admin password (root/sohoADMIN_PASSWORD). If there is no admin password (like on my Fujitsu), the password is simply soho. This is very high-level access: you can permanently break your box and lose your data if you mess things up, so tread lightly!
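Before opening PuTTY, you can confirm that the support page actually turned SSH on by attempting a plain TCP connection to the SSH port from your PC. This is a minimal Python sketch, assuming the daemon listens on the standard port 22 (an assumption, not something the support page told me). `NAS-IP` is the same placeholder for your unit's address used above.

```python
import socket


def ssh_port_open(host: str, port: int = 22, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Assumption: the NAS's SSH daemon listens on the default port 22.
    """
    try:
        # create_connection resolves the name and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts and name-resolution errors are all
        # OSError subclasses, so any of them means "not reachable".
        return False
```

For example, `ssh_port_open("NAS-IP")` should flip from False to True once SSH has been enabled on the support page.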

I tried a USB NIC based on the ASIX AX88772B chip and confirmed it didn't work because the drivers weren't loaded. The 'lsusb' command shows all attached USB devices and 'ifconfig -a' shows all network interfaces. I also checked 'lsmod' to see whether any additional modules were loaded, and there didn't seem to be any, so I gave up at this point. Someone more knowledgeable than me in Linux could probably figure out quickly how to add the driver and get the USB NIC working. (Please post if you know how, as I might try it.)
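To tell "adapter not detected" apart from "adapter detected but no driver", the `lsusb` and `lsmod` output can be checked together. This is a minimal Python sketch meant to run on a PC against output copied from the SSH session (I don't know whether the stock firmware ships a Python interpreter). It assumes ASIX's USB vendor ID is 0b95 and that the mainline Linux driver for the AX88772 family is the `asix` module sitting on top of `usbnet`; whether the SS4200's stock kernel was built with that driver at all is something I haven't verified.

```python
import re

# Assumption: 0b95 is the USB vendor ID registered to ASIX Electronics.
ASIX_VENDOR_ID = "0b95"


def usb_ids(lsusb_output: str) -> list[tuple[str, str]]:
    """Extract (vendor, product) ID pairs from `lsusb` output lines like
    'Bus 001 Device 003: ID 0b95:772b ...'."""
    return re.findall(r"ID ([0-9a-fA-F]{4}):([0-9a-fA-F]{4})", lsusb_output)


def loaded_modules(modules_text: str) -> set[str]:
    """Module names from `lsmod` or /proc/modules text (first column)."""
    names = set()
    for line in modules_text.splitlines():
        fields = line.split()
        if fields and fields[0] != "Module":  # skip lsmod's header row
            names.add(fields[0])
    return names


def asix_status(lsusb_output: str, modules_text: str) -> dict:
    """Report whether an ASIX adapter is attached and its driver is loaded."""
    attached = any(v.lower() == ASIX_VENDOR_ID for v, _ in usb_ids(lsusb_output))
    mods = loaded_modules(modules_text)
    return {
        "adapter_attached": attached,
        # Assumption: 'asix' drives AX88772-family chips via 'usbnet'.
        "asix_loaded": "asix" in mods,
        "usbnet_loaded": "usbnet" in mods,
    }
```

An attached adapter with `asix_loaded` False matches what I saw: the hardware is there, but nothing is driving it.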

So with no driver for a USB NIC, I started researching which driver supports the native NIC and what other NICs that driver supports, the idea being to add a second NIC in the PCIe x1 slot.

I saw 'e100' and 'e1000' as loaded modules via the 'lsmod' command. These are Intel's NIC drivers, and they support at least the following NICs according to the docs I found at

https://downloadmirror.intel.com/5154/ENG/e100.htm and

https://downloadmirror.intel.com/20927/eng/e1000.htm:

e100:

| Controller | Adapter | Board IDs |
|---|---|---|
| 82558 | PRO/100+ PCI Adapter | 668081-xxx, 689661-xxx |
| 82558 | PRO/100+ Management Adapter | 691334-xxx, 701738-xxx, 721383-xxx |
| 82558 | PRO/100+ Dual Port Server Adapter | 714303-xxx, 711269-xxx, A28276-xxx |
| 82558 | PRO/100+ PCI Server Adapter | 710550-xxx |
| 82550, 82559 | PRO/100 S Server Adapter | 752438-xxx (82550); A56831-xxx, A10563-xxx, A12171-xxx, A12321-xxx, A12320-xxx, A12170-xxx, 748568-xxx, 748565-xxx (82559) |
| 82550, 82559 | PRO/100 S Desktop Adapter | 751767-xxx (82550); 748592-xxx, A12167-xxx, A12318-xxx, A12317-xxx, A12165-xxx, 748569-xxx (82559) |
| 82559 | PRO/100+ Server Adapter | 729757-xxx |
| 82559 | PRO/100 S Management Adapter | 748566-xxx, 748564-xxx |
| 82550 | PRO/100 S Dual Port Server Adapter | A56831-xxx |
| 82551 | PRO/100 M Desktop Adapter | A80897-xxx |
| | PRO/100 S Advanced Management Adapter | 747842-xxx, 745171-xxx |
| | CNR PRO/100 VE Desktop Adapter | A10386-xxx, A10725-xxx, A23801-xxx, A19716-xxx |
| | PRO/100 VM Desktop Adapter | A14323-xxx, A19725-xxx, A23801-xxx, A22220-xxx, A23796-xx |

e1000:

Intel® PRO/1000 PT Server Adapter

Intel® PRO/1000 PT Desktop Adapter

Intel® PRO/1000 PT Network Connection

Intel® PRO/1000 PT Dual Port Server Adapter

Intel® PRO/1000 PT Dual Port Network Connection

Intel® PRO/1000 PF Server Adapter

Intel® PRO/1000 PF Network Connection

Intel® PRO/1000 PF Dual Port Server Adapter

Intel® PRO/1000 PB Server Connection

Intel® PRO/1000 PL Network Connection

Intel® PRO/1000 EB Network Connection with I/O Acceleration

Intel® PRO/1000 EB Backplane Connection with I/O Acceleration

Intel® PRO/1000 PT Quad Port Server Adapter

Intel® PRO/1000 PF Quad Port Server Adapter

Intel® 82566DM-2 Gigabit Network Connection

Intel® Gigabit PT Quad Port Server ExpressModule

Intel® PRO/1000 Gigabit Server Adapter

Intel® PRO/1000 PM Network Connection

Intel® 82562V 10/100 Network Connection

Intel® 82566DM Gigabit Network Connection

Intel® 82566DC Gigabit Network Connection

Intel® 82566MM Gigabit Network Connection

Intel® 82566MC Gigabit Network Connection

Intel® 82562GT 10/100 Network Connection

Intel® 82562G 10/100 Network Connection

Intel® 82566DC-2 Gigabit Network Connection

Intel® 82562V-2 10/100 Network Connection

Intel® 82562G-2 10/100 Network Connection

Intel® 82562GT-2 10/100 Network Connection

Intel® 82578DM Gigabit Network Connection

Intel® 82577LM Gigabit Network Connection

Intel® 82578DC Gigabit Network Connection

Intel® 82577LC Gigabit Network Connection

Intel® 82567V-3 Gigabit Network Connection
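Seeing e100 and e1000 in 'lsmod' only says the modules are loaded, not which one drives eth0 (or, once a second card is in, eth1). On Linux, each interface's sysfs entry has a `device/driver` symlink pointing at the bound driver. This is a minimal Python sketch, assuming the SS4200's kernel exposes the standard `/sys/class/net/<iface>/device/driver` layout (I haven't confirmed this on its older kernel) and that Python is available on the box. `ethtool -i eth0` reports the same driver name if ethtool is present.

```python
import os


def interface_drivers(sys_class_net: str = "/sys/class/net") -> dict[str, str]:
    """Map each network interface to the kernel driver bound to its device.

    Assumption: standard sysfs layout, where <iface>/device/driver is a
    symlink into the bus's driver directory (e.g. .../bus/pci/drivers/e1000).
    Virtual interfaces such as 'lo' have no backing device and are skipped.
    """
    drivers = {}
    for iface in sorted(os.listdir(sys_class_net)):
        link = os.path.join(sys_class_net, iface, "device", "driver")
        if os.path.islink(link):
            # The basename of the resolved symlink is the driver's name.
            drivers[iface] = os.path.basename(os.path.realpath(link))
    return drivers
```

Without Python, `ls -l /sys/class/net/eth1/device/driver` shows the same symlink by hand.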

I have a Dell 0U3867 Intel PRO/1000 PT PCIe server adapter, which matches a supported NIC on the list, so that became the testbed. The idea was that if this card is installed in the system, it should be recognized, and depending on how far the software goes in accommodating two NICs, it might 'just work' after installation.

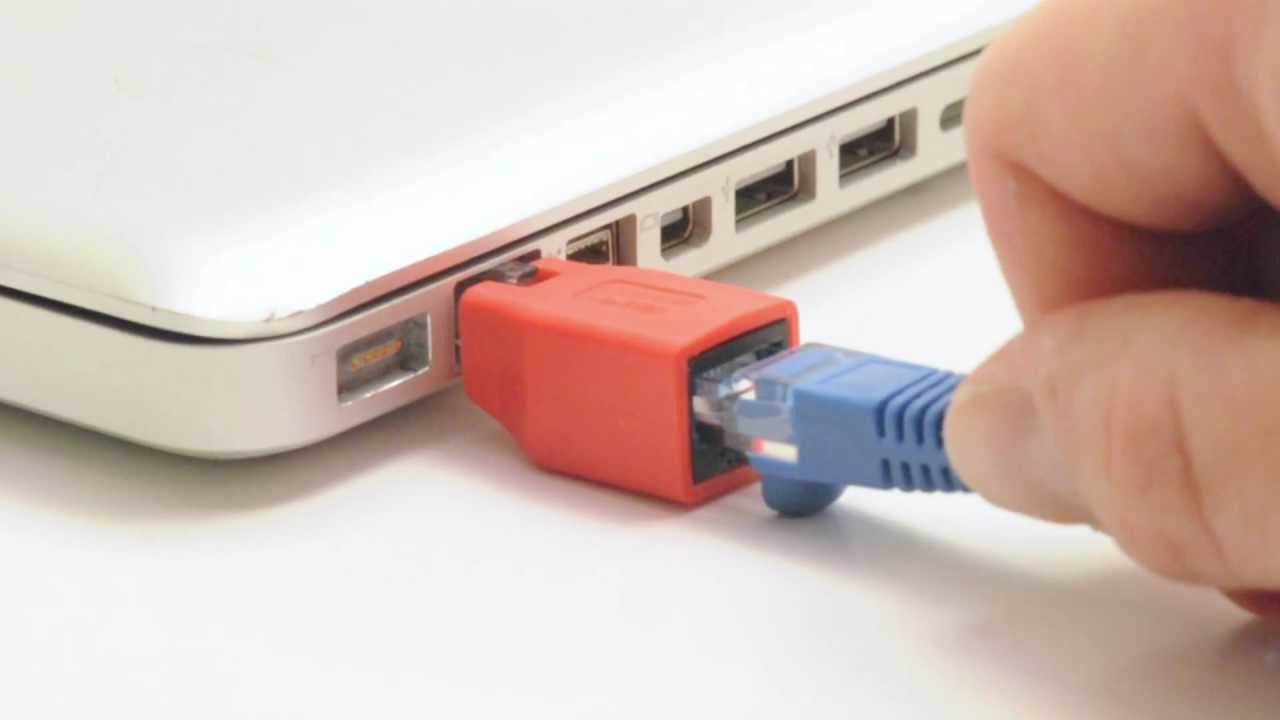

To run this test, the system cover had to be off and drives 3 and 4 could not be installed. (I had set up a 1 TB mirrored configuration using the drives in bays 1 and 2 just for this scenario.) The drive cages for 3 and 4 need to be flipped over onto 1 and 2 to leave enough vertical room for the NIC. The unit can't run permanently this way; mounting the NIC elsewhere will require a PCIe x1 extension cable. The NIC's retaining bracket also had to be removed. Because of the tight test fit, I had to plug the Ethernet cable into the NIC before seating it in the x1 slot, otherwise there was no way to plug the cable in afterward. I had to remind myself to make sure the system was completely unplugged while doing this.

The second NIC's lights came alive shortly after the first, so I knew it was at least powered. Once the system booted normally, 'ifconfig -a' showed an eth1 on the list, but it was not listed as an active interface. I can't remember exactly which ifconfig commands I ran (it was late), but I was able to bring the interface up and assign it a static IP. Using this static IP, I could ping the box on both the native NIC and the second NIC.
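Since I didn't record the exact commands, here is the usual net-tools/BusyBox form for bringing an interface up with a static address, reconstructed rather than copied from my session. This is a minimal Python sketch that builds the command and only runs it when `dry_run=False`. The address in the usage line is a hypothetical example, so substitute a free address on your LAN. Running it on the NAS assumes a Python interpreter is available there, which I haven't checked; otherwise just type the resulting command at the SSH prompt.

```python
import ipaddress
import subprocess


def ifconfig_up_cmd(iface: str, cidr: str) -> list[str]:
    """Build an argv like: ifconfig eth1 192.168.1.51 netmask 255.255.255.0 up."""
    # IPv4Interface validates the address/prefix and derives the netmask;
    # it raises ValueError for malformed input such as 192.168.1.300/24.
    addr = ipaddress.IPv4Interface(cidr)
    return ["ifconfig", iface, str(addr.ip), "netmask", str(addr.netmask), "up"]


def bring_up(iface: str, cidr: str, dry_run: bool = True) -> list[str]:
    """Return the ifconfig argv, executing it only when dry_run is False."""
    cmd = ifconfig_up_cmd(iface, cidr)
    if not dry_run:
        # Needs root on the NAS; check=True raises CalledProcessError on failure.
        subprocess.run(cmd, check=True)
    return cmd
```

For example, `bring_up("eth1", "192.168.1.51/24")` returns `['ifconfig', 'eth1', '192.168.1.51', 'netmask', '255.255.255.0', 'up']` without touching the interface. An address set this way with ifconfig does not survive a reboot.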

After logging into the web interface through both NICs in different browsers, I noticed the CPU temperature nearing 100F. Compared with my other SS4200, which was at 60F, it was obvious that leaving the cover off was not allowing proper airflow. I turned on a ceiling fan in the room with the test unit and temps came down about 10F. The rear fans did not speed up at all even though the temperature rose. This is why I hate PWM fan designs, but in this system it normally does a great job of staying quiet while cooling adequately, which will be important for where this unit will finally be deployed: several thousand miles away at the end of an IPsec VPN tunnel as a near-time off-site backup.

With both interfaces working, I checked whether the second interface helps performance. In a nutshell, it doesn't: I got the same performance hammering the native NIC with multiple sessions as I did splitting the tests between both NICs. I believe the likely limits on speed are the CPU (although I never saw it above 30% in 'top'), memory (which can be upgraded to 1 GB, but the software limits it to that maximum), and drive speed. It would be interesting to see what modern SSDs that can transfer in excess of 500 MB/s would do in this unit, but alas I have no such drives to test with.
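The reasoning behind that conclusion is that end-to-end throughput is capped by the slowest stage, so doubling network capacity only helps if the network was the slowest stage. This is a small Python sketch of that arithmetic. The 94% framing-efficiency factor is an approximation for standard 1500-byte Ethernet frames carrying TCP, not a measurement, and any disk or CPU figures you feed into `bottleneck()` should come from your own tests (none are given here).

```python
def gbe_payload_mb_s(links: int = 1, efficiency: float = 0.94) -> float:
    """Approximate usable throughput, in MB/s, of `links` gigabit ports.

    1 Gb/s = 1000 Mb/s / 8 = 125 MB/s raw. `efficiency` is an assumed
    factor for Ethernet/IP/TCP overhead (roughly 94% at a 1500-byte MTU).
    """
    return links * 1000 / 8 * efficiency


def bottleneck(stages: dict[str, float]) -> tuple[str, float]:
    """Return the slowest stage; overall throughput cannot exceed it."""
    name = min(stages, key=stages.get)
    return name, stages[name]
```

`gbe_payload_mb_s()` gives about 117.5 MB/s for one port and `gbe_payload_mb_s(2)` about 235 MB/s. If splitting the load across two ports doesn't raise total throughput, then with measured figures plugged in, `bottleneck()` should point at something other than the network. By the same arithmetic, a 500 MB/s SSD would outrun a single gigabit link roughly fourfold.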