
Quad9 public domain name service moves to Switzerland for maximum internet privacy protection


From what I've heard, this site checks the Linux version of the server.

So not what you KNOW, just internet prattle.

The SADDNS test page warns you that the test is not necessarily accurate. Other researchers have also warned that the results of DNS server checks are cached for long periods, which gives false results.

Quad9 and many other providers use DNSSEC, which goes a long way toward preventing SAD DNS issues, and all the information released is a proof of concept, so you need to stop panicking.

Just because you don't like or understand the reply you received does not make Quad9 "bad".

Where do you see panic? I said that Quad9 is bad at reacting to new things, not that it's bad overall, so you're lying here (I pointed out both the good and the bad sides). The only DNS server that reacted quickly and scored higher than Quad9 is NextDNS; that's also true. Even Quad9 themselves replied that they are investigating the problem, not that it's already "fixed". You'd better stop spreading lies and misinformation and focus on facts instead.

Can you give a specific example of Quad9 being slow to react (or "bad at reacting") to new things? The SAD thing isn't in fact an example of that, since it's not something we were vulnerable to. We were the first recursive resolver to implement DNSSEC. We were the first recursive resolver to be GDPR compliant. We were the first recursive resolver to implement standards-based encryption. We were the first recursive resolver to integrate multi-source threat intelligence for malware blocking. We're the first recursive resolver to relocate to a jurisdiction which doesn't have national security gag orders. We're the first recursive resolver to not be subject to intelligence or law enforcement data collection requirements. We're the first recursive resolver to have a human rights policy. And this week we just committed to publish our negative trust anchor list, called for others to do the same, and began the work to establish an open process for a unified NTA list to be publicly managed.

If there are ways we can improve, we're all ears. It's how we continue making things better.

It still looks like Quad9 fails the saddns.net test. My comment about Quad9 being slow to react is based exclusively on the SAD DNS vulnerability. I understand the points about the test not being accurate. Scoring high on tests and being free are the biggest advantages of Quad9, in my opinion, but reacting to this proof of concept vulnerability is taking way too long.

I'm not clear on what you're looking for, exactly. Do you want us to work with the developer of the test to try to help them to improve their test until it's accurate? That doesn't scale, and doesn't provide users with any benefit, so that's really not where our effort is directed.

"Scoring high on tests" isn't a goal for us. We work to protect users.

You say you want us to "react quickly" but you don't specify what that reaction would be.

Can you detail the chain of events that you're imagining would happen, in a perfect world?


but reacting to this proof of concept vulnerability is way too long in my opinion.

How do they react to something Quad9 isn't vulnerable to?

The way I see it, this is a bug with the SADDNS site, not with Quad9. It's up to that test site to fix their test, Quad9 cannot fix it for them.

It's just like Cloudflare's DoT+DNSSEC test site, which has been broken for nearly a year; no amount of poking at them got them to fix it. The best we managed to do (as the community) was to get them to acknowledge that it was broken (as they generate temporary URLs that break DNSSEC validation).

You are barking up the wrong tree here...

If you state that they've already fixed it, then maybe I'll switch back to it from the paid DNS I'm using, which is NextDNS.

Your DNS server IP is 74.63.28.246

Since it blocks outgoing ICMP packets, your DNS server is not vulnerable.

The test currently only takes the side channel port scanning vulnerability into consideration. A successful attack may also require other features in the server (e.g., supporting cache).

The test is conducted on 2021-03-04 14:30:05.122875679 UTC

Disclaimer: This test is not 100% accurate and is for test purposes only.

Quad9 was never vulnerable to SADDNS; there was nothing to fix.

Why bother looking? Quad9 staff have told you that Q9 was never vulnerable to this "attack".

Sadly, I just tested Quad9 and SADDNS still reports it as vulnerable.


thehackernews.com


We've been following the issue closely... In general, we're stretched very thin, so we have to be very careful about taking on a larger scope of issues than we can see our way to successfully solving. We view our mandate as a balance of security, privacy, and performance. And we work as much by trying to establish new minimum-acceptable-levels-of-service as by doing everything ourselves. We don't have the resources to protect the whole world directly, and that would be an inefficient way to go about things anyway. Plus it would centralize control, which is an anti-goal. So we try to very pointedly do limited things better, such that the monetizers have to also raise their standards somewhat in order to remain in the running.

Our blocking has been limited to fairly narrowly-construed security threats, thus far: malware, phishing, C&C, homonyms and typo-squatting. By focusing on that, we've been able to achieve a 98% protection rate, in an industry which previously considered 10% to be good. Consequently, others have picked up their game as well, and we're now starting to see a few others that are consistently blocking the majority of threats. So, people are more secure now.

~

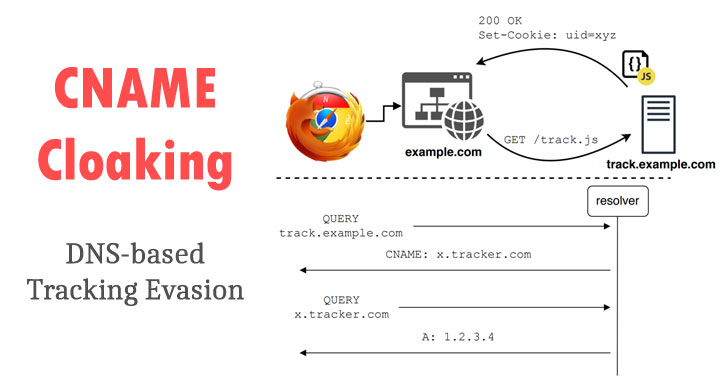

But the CNAME chaining threat is another issue. It's one I brought up explicitly two years ago as one of the harms that was likely to befall non-profits if a private equity firm was allowed to monetize the .ORG domain, so it's not a new threat, but as people are noticing, it's getting worse and worse. Mitigating it would be a bit of a game of cat-and-mouse, but not more difficult or complicated than the malware threats that analysts currently identify.

~

So, to return to your question: the simplest mechanism I can think of for dealing with the CNAME chaining thing is to take a few ad-blocking and tracker-blocking feeds, resolve them all back to IP addresses, and expand those IP addresses out to /24s and /48s; then watch the IP addresses that come back in answers (A and AAAA records, whether at the end of CNAME chains or not), block anything that comes back from those subnets, and wait for false-positive reports. Narrow the size of the address blocks in response to false positives. Look at what QNAMEs produce IP addresses in those ranges, and spider outwards from there.
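The mechanism described above can be sketched with Python's standard `ipaddress` module. This is a minimal illustration under stated assumptions, not Quad9's implementation: the seed addresses and hostnames are hypothetical (drawn from documentation ranges), an answer is modelled as a plain list of A/AAAA address strings (actual resolution and feed parsing are left out), and "narrowing" is interpreted here as re-deriving longer prefixes around the original seeds, in assumed 4-bit steps, until the reported false positive falls outside the block.

```python
import ipaddress

# Hypothetical seed data: in practice these would come from resolving the
# hostnames listed in ad-blocking / tracker-blocking feeds.
SEED_ADDRESSES = ["192.0.2.10", "198.51.100.77", "2001:db8:42::1"]


def expand_to_blocks(addresses, v4_prefix=24, v6_prefix=48):
    """Widen each seed address to its enclosing /24 (IPv4) or /48 (IPv6)."""
    blocks = set()
    for addr in addresses:
        ip = ipaddress.ip_address(addr)
        prefix = v4_prefix if ip.version == 4 else v6_prefix
        blocks.add(ipaddress.ip_network(f"{ip}/{prefix}", strict=False))
    return blocks


def matching_block(addr, blocks):
    """Return a watched block containing addr, or None."""
    ip = ipaddress.ip_address(addr)
    for net in blocks:
        if ip in net:  # always False across IPv4/IPv6, so mixed sets are safe
            return net
    return None


def should_block(answer_addresses, blocks):
    """Block an answer if any A/AAAA address in it, whether it came at the
    end of a CNAME chain or not, falls inside a watched block."""
    return any(matching_block(a, blocks) is not None for a in answer_addresses)


def narrow_on_false_positive(blocks, false_positive, seeds, step=4):
    """Replace the block covering a reported false positive with narrower
    blocks around the original seeds that no longer cover it."""
    fp = ipaddress.ip_address(false_positive)
    wide = matching_block(false_positive, blocks)
    if wide is None:
        return set(blocks)
    narrowed = set(blocks) - {wide}
    for seed in seeds:
        ip = ipaddress.ip_address(seed)
        if ip not in wide:
            continue
        prefix = min(wide.prefixlen + step, ip.max_prefixlen)
        candidate = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
        # Keep tightening until the false positive falls outside the block.
        while fp in candidate and prefix < ip.max_prefixlen:
            prefix = min(prefix + step, ip.max_prefixlen)
            candidate = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
        if fp not in candidate:
            narrowed.add(candidate)
    return narrowed


def qnames_to_investigate(observed, blocks):
    """From (qname, addresses) pairs seen in answers, list the QNAMEs whose
    answers land in watched blocks: starting points for spidering outward."""
    return sorted({q for q, addrs in observed if should_block(addrs, blocks)})
```

For example, `expand_to_blocks(SEED_ADDRESSES)` yields `192.0.2.0/24`, `198.51.100.0/24` and `2001:db8:42::/48`; a false-positive report for `192.0.2.200` then shrinks the first block to `192.0.2.0/28`, which still covers the seed `192.0.2.10`. Re-deriving blocks from the seeds, rather than carving the single reported address out of a wide block, is one way to read "narrow the size of the address blocks"; it trades some coverage for fewer repeat false positives.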

~

And right now, there are not a lot of people working in this space. I'm sure they'll get sued and sabotaged, to an even greater degree than people doing cybersecurity analysis already are, and it'll be by folks with more resources, who don't simultaneously have to hide from the law. At least, they don't have to hide in the US. In Europe, they're criminals. In the US, they get a pass.

So, already stretched thin, it's not clear to me that this is an area that's ready for us to expend resources yet. Though I'd be really, really happy to be proven wrong, and I look forward to there being more people working on it, producing deanonymization threat feeds, in the same way that there are malware threat feeds for us to integrate right now.
