Installed iPerf on a second hardwired computer so I could run two full-speed tests from different wireless clients. Here's what I found...

1) With only one client running the test, I naturally got the expected full 400-500 Mb/s.

2) With both clients testing on the same node and same channel, each client's speed was cut almost exactly in half... 200-250 Mb/s.

3) With both clients testing on different nodes using the same channel, speed was well under half... 100-150 Mb/s (though, again, in last night's similar test, a full-speed iPerf run on one device alongside a lower-speed internet speed test on the other did not have a huge impact on either).

4) Manually changing the 5 GHz channel width to 40 MHz gave speeds of 200-250 Mb/s.

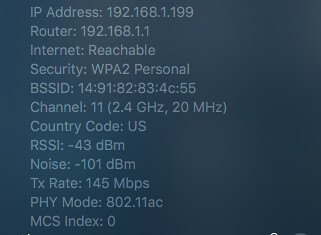
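Adding up the per-client figures makes the four results easier to compare. This is a minimal sketch, not part of my original testing, and it assumes that tests 2 through 4 each had two clients running at once and that the 40 MHz figure in test 4 is per client (I didn't spell that out above). The `aggregate` helper and `SCENARIOS` table are just illustrative names.

```python
# Per-client iPerf throughput ranges (Mb/s) from the four tests above.
# Assumption: tests 2-4 each ran two clients concurrently, and test 4's
# 200-250 Mb/s figure is per client.
SCENARIOS = {
    "1: one client": (1, 400, 500),
    "2: two clients, same node, same channel": (2, 200, 250),
    "3: two clients, different nodes, same channel": (2, 100, 150),
    "4: two clients, 40 MHz channel width": (2, 200, 250),
}


def aggregate(clients, low, high):
    """Return the (low, high) combined throughput across concurrent clients."""
    return clients * low, clients * high


for name, (clients, low, high) in SCENARIOS.items():
    agg_low, agg_high = aggregate(clients, low, high)
    print(f"{name}: {agg_low}-{agg_high} Mb/s combined")
```

Under those assumptions, two clients sharing one channel on the same node still add up to roughly the same 400-500 Mb/s a single client gets, while two nodes contending for the same channel drop the combined total to about 200-300 Mb/s.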

One interesting note (in this test, Node A is a hardwired remote node and Node B is a wireless remote node): while the test was running on Node A (on the same 5 GHz channel as Node B), I could not get my other device to connect to Node B. It insisted on connecting either to Node A's other (free) channel or to that same (free) channel on the main node, even though both were farther away than Node B. As soon as the test on Node A finished, the second device readily connected to Node B. I don't know whether the device itself detected heavy traffic/noise on that channel (because a different node was using it heavily) and opted for the farther-away but clear channel, or whether the Velop system steered it there. Either way, once I got the two idle devices onto the same channel on different nodes and started the test, they stayed on those channels, so whatever load balancing was taking place apparently happens only when a device roams, cycles Wi-Fi off/on, etc., not after the connection has been established.

Anyway, I suppose if your network frequently has multiple wireless clients doing large file transfers at the same time on the LAN (or perhaps over gigabit internet), narrower channel widths might give better overall performance: each client gets lower peak throughput, but more than it would get with multiple nodes contending for the same channel. But I suspect that for most households, leaving it at the default is the best bet, especially since it would probably be hard to switch to narrower channels without also hurting the backhaul.

Indeed, when I asked Linksys about manually setting my 2.4 GHz channels a week or so ago, the tech said that was fine but strongly suggested that users not fiddle with the 5 GHz channels (which I hadn't planned on doing anyway... "you're meddling with powers you cannot begin to comprehend"). So once I finished that test, I set all my 5 GHz settings back to auto.

And again, I'm thoroughly pleased with my wireless network's performance at these default settings. At any given time, scattered throughout our house, there will be some combination of the living room TV and three Apple TVs streaming movies from Netflix or Plex, my wife watching videos on her iMac, and the kids playing games and watching videos on iPads and iPhones, and I still get excellent speed. It's only when I deliberately try to overwork the network with what, for my household, is an unrealistic scenario that speed suffers noticeably.