Lynx

Senior Member

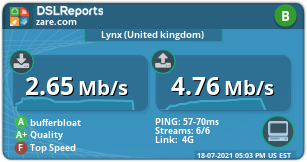

I spent some time trying to work out why I could not control bufferbloat on my connection with the default cake setup in firmware 386.2_6. Eventually I found that turning off my VPN (an OpenVPN client connected to NordVPN) makes the bufferbloat go away.

How does cake interact with OpenVPN? Do the eth0 and ifb4eth0 shapers still make sense when the traffic goes through a VPN tunnel?
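My working guess, which I haven't confirmed, is this. With the VPN up, every LAN connection leaves eth0 inside one encrypted OpenVPN connection to a single NordVPN server. That means cake on eth0 and ifb4eth0 can only hash that one outer flow. Flow isolation and the dual-srchost/dual-dsthost per-host fairness then have nothing to separate, so a ping ends up sharing a queue with any bulk download. One way to check is to count the flows cake currently sees. Below is a minimal sketch. It assumes the `sp_flows` and `bk_flows` rows that `tc -s qdisc` prints for cake, with one column per tin. The function name `cake_active_flows` is my own.

```shell
# Sum cake's sparse + bulk flow counters across all tins.
# Reads the output of `tc -s qdisc show dev <iface>` on stdin.
# Assumption: cake's stats table has rows starting with "sp_flows" and
# "bk_flows", followed by one numeric column per tin.
cake_active_flows() {
    awk '
        $1 == "sp_flows" || $1 == "bk_flows" {
            for (i = 2; i <= NF; i++) total += $i
        }
        END { print total + 0 }
    '
}

# On the router, during a speed test:
#   tc -s qdisc show dev eth0 | cake_active_flows
#   tc -s qdisc show dev ifb4eth0 | cake_active_flows
```

Under load with the VPN on, I'd expect a total close to 1. With the VPN off, I'd expect something closer to the number of active connections. The counters are an instantaneous snapshot, so sampling a few times during the test would be more telling than a single reading.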

What settings do I need to configure?
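Suppose cake were moved onto the VPN tunnel interface instead. It would then see the inner (unencrypted) packets, so its bandwidth setting would need to leave room for OpenVPN's per-packet encapsulation overhead on the real link. As a rough rule of thumb (my own arithmetic, not a documented formula), the inner rate is the WAN rate scaled by inner / (inner + overhead). Below is a minimal sketch. The function name `tun_rate_kbit` is hypothetical, and the packet size and overhead values are placeholders, not measured figures for NordVPN.

```shell
# Rough shaping rate for the tunnel: scale the WAN rate by the share of
# each wire packet that is inner (tunnel) payload.
#   $1 = WAN shaper rate in kbit/s (e.g. the 5120 currently set on eth0)
#   $2 = typical inner packet size in bytes (placeholder)
#   $3 = assumed OpenVPN encapsulation overhead per packet in bytes (placeholder)
tun_rate_kbit() {
    wan_kbit=$1
    inner=$2
    overhead=$3
    # Integer division rounds down, i.e. towards slightly conservative shaping.
    echo $(( wan_kbit * inner / (inner + overhead) ))
}

# Example with made-up numbers:
#   tun_rate_kbit 5120 1400 100   # prints 4778
```

Small packets such as ACKs, VoIP and game traffic carry proportionally more overhead, so a figure computed from large packets is on the optimistic side. cake's `overhead` keyword adds a fixed number of bytes to each packet in its rate accounting. It would therefore compensate per packet rather than relying on an average.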

Otherwise, how can I get cake to work properly with NordVPN?

For reference, this is my current cake setup:

admin@RT-AX86U-4168:/tmp/home/root# tc qdisc | grep cake
qdisc cake 8001: dev eth0 root refcnt 2 bandwidth 5120Kbit diffserv3 dual-srchost nat nowash no-ack-filter split-gso rtt 100ms noatm overhead 0
qdisc cake 8002: dev ifb4eth0 root refcnt 2 bandwidth 5120Kbit besteffort dual-dsthost nat wash ingress no-ack-filter split-gso rtt 100ms noatm overhead 0
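The other approach I can think of is to shape on the tunnel device itself. That would mirror the eth0/ifb4eth0 pair, so cake could hash the individual connections inside the VPN. The function below only prints the commands, so they can be reviewed before anything is applied. None of the following assumptions has been verified on this firmware:
- the first OpenVPN client's device is `tun11` (check with `ip link`);
- the kernel provides the u32 classifier and the mirred action;
- the rates passed in are placeholders.

The function name `cake_tun_cmds` is hypothetical. The cake flags are copied from the two qdiscs shown above, and only the device and bandwidth change.

```shell
# Print, but do not run, the tc/ip commands that would put cake on the VPN tunnel.
#   $1 = tunnel device (hypothetical default tun11; confirm with `ip link`)
#   $2 = upload rate in kbit/s, $3 = download rate in kbit/s (placeholders)
cake_tun_cmds() {
    dev=${1:-tun11}
    up_kbit=$2
    down_kbit=$3
    ifb="ifb4$dev"

    # Egress: packets handed to the tunnel device are still unencrypted,
    # so cake can tell the individual LAN connections apart here.
    echo "tc qdisc replace dev $dev root cake bandwidth ${up_kbit}kbit diffserv3 dual-srchost nat nowash"

    # Ingress: redirect everything arriving on the tunnel to an ifb device
    # and shape it there (presumably the same pattern as eth0 -> ifb4eth0).
    echo "ip link add name $ifb type ifb"
    echo "ip link set $ifb up"
    echo "tc qdisc add dev $dev handle ffff: ingress"
    echo "tc filter add dev $dev parent ffff: protocol all u32 match u32 0 0 action mirred egress redirect dev $ifb"
    echo "tc qdisc replace dev $ifb root cake bandwidth ${down_kbit}kbit besteffort dual-dsthost nat wash ingress"
}

# Review first, then apply on the router only if the output looks right:
#   cake_tun_cmds tun11 4700 4700
#   cake_tun_cmds tun11 4700 4700 | sh
```

The tunnel device is recreated whenever the VPN reconnects, so these commands would have to be re-applied each time the tunnel comes up. Leaving the eth0 shaper in place as well would put two shapers in series, which may or may not be what I want.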
